JavaAI: Learning LangChain4j (Part 1): Integrating Spring Boot with Alibaba Tongyi Qianwen DashScope

Integrating LangChain4j with Spring Boot and Alibaba Tongyi Qianwen DashScope

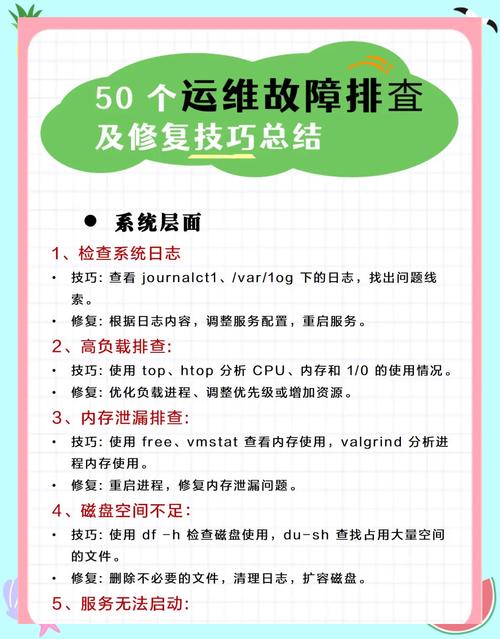

- Resources

- Notes

- Without Spring Boot (plain integration)

- Integrating the Spring Boot starter

- pom.xml

- Configuration

- Usage example

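Resources

LangChain4j official documentation

LangChain4j Community DashScope

LangChain4j Maven repository

Alibaba Tongyi Qianwen API

Notes

Version used in this post: 1.0.0-beta2

Without Spring Boot (plain integration)

Extra: if you are not using Spring Boot and only want to integrate LangChain4j DashScope, add the DashScope module directly.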

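If the langchain4j-dashscope version you use is earlier than 1.0.0-alpha1:

    <dependency>
        <groupId>dev.langchain4j</groupId>
        <artifactId>langchain4j-dashscope</artifactId>
        <version>${previous version here}</version>
    </dependency>

If you use 1.0.0-alpha1 or later, the module lives in the community repository under a new name:

    <dependency>
        <groupId>dev.langchain4j</groupId>
        <artifactId>langchain4j-community-dashscope</artifactId>
        <version>${langchain4j.version}</version>
    </dependency>

Integrating the Spring Boot starter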

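The Spring Boot starters help create and configure language models, embedding models, embedding stores, and other core LangChain4j components. To use a starter, add the corresponding dependency to pom.xml.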

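pom.xml

If the version you use is earlier than 1.0.0-alpha1:

    <dependency>
        <groupId>dev.langchain4j</groupId>
        <artifactId>langchain4j-dashscope-spring-boot-starter</artifactId>
        <version>${langchain4j.version}</version>
    </dependency>

If the version you use is 1.0.0-alpha1 or later:

    <dependency>
        <groupId>dev.langchain4j</groupId>
        <artifactId>langchain4j-community-dashscope-spring-boot-starter</artifactId>
        <version>${latest version here}</version>
    </dependency>

Alternatively, manage dependency versions with the BOM: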

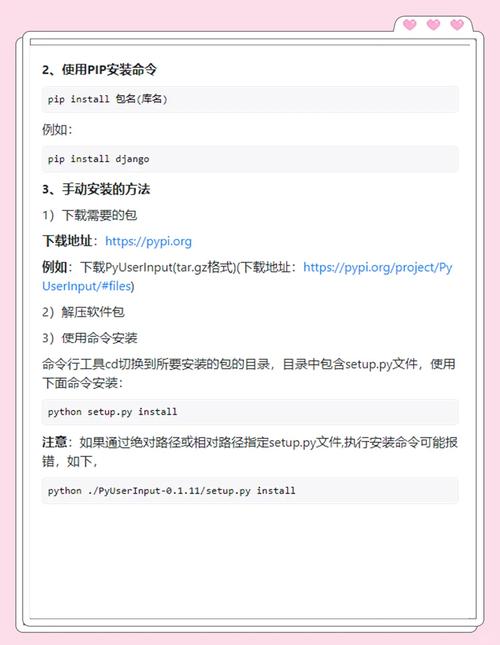

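    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>dev.langchain4j</groupId>
                <artifactId>langchain4j-community-bom</artifactId>
                <version>${latest version here}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

Configuration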

After adding the dependency, register the configuration in application.properties:

    langchain4j.community.dashscope.api-key=
    langchain4j.community.dashscope.model-name=
    # Whether to log requests and responses
    langchain4j.community.dashscope.log-requests=true
    langchain4j.community.dashscope.log-responses=true
    # Log level
    logging.level.dev.langchain4j=DEBUG

You can add other parameters as well, for example QwenChatModel parameters:

    langchain4j.community.dashscope.temperature=0.7
    langchain4j.community.dashscope.max-tokens=4096
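The parameter table below uses the builder's camelCase names (maxTokens, topP, ...), while application.properties uses kebab-case keys such as max-tokens. Spring Boot's relaxed binding maps one form onto the other. The following is a minimal sketch of that naming convention. PropertyKeys, camelToKebab and propertyKey are hypothetical helpers, not part of LangChain4j or Spring. The prefix is assumed from the configuration above.

```java
import java.util.List;

// Hypothetical helper for illustration only; not part of LangChain4j or Spring Boot.
class PropertyKeys {

    // Turn a camelCase name such as "maxTokens" into kebab-case "max-tokens"
    static String camelToKebab(String camel) {
        StringBuilder sb = new StringBuilder(camel.length() + 4);
        for (char c : camel.toCharArray()) {
            if (Character.isUpperCase(c)) {
                sb.append('-').append(Character.toLowerCase(c)); // new word boundary
            } else {
                sb.append(c);
            }
        }
        return sb.toString();
    }

    // Build "<prefix>.<kebab-case name>", e.g. "<prefix>.top-p"
    static String propertyKey(String prefix, String camelName) {
        return prefix + "." + camelToKebab(camelName);
    }

    public static void main(String[] args) {
        // Prefix assumed from the application.properties example above
        String prefix = "langchain4j.community.dashscope";
        List<String> names = List.of("apiKey", "modelName", "temperature", "maxTokens",
                "topP", "topK", "enableSearch", "repetitionPenalty");
        for (String name : names) {
            System.out.println(name + " -> " + propertyKey(prefix, name));
        }
    }
}
```

Running main prints one key per parameter, for example maxTokens -> langchain4j.community.dashscope.max-tokens.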

The official parameters are as follows.

langchain4j-community-dashscope provides four text-generation models:

- QwenChatModel

- QwenStreamingChatModel

- QwenLanguageModel

- QwenStreamingLanguageModel

QwenChatModel accepts the following parameters. The other three models accept the same ones:

| Property | Description | Default value |
|---|---|---|
| baseUrl | The URL to connect to. You can connect to DashScope Text Inference and Multi-Modal over HTTP or WebSocket. | |
| apiKey | The API key. | |
| modelName | The model to use. | qwen-plus |
| topP | The probability threshold for nucleus sampling; controls the diversity of the generated text. The higher the top_p, the more diverse the generated text, and vice versa. Value range: (0, 1.0]. We generally recommend altering this or temperature, but not both. | |
| topK | The size of the sampled candidate set during generation. | |
| enableSearch | Whether the model uses Internet search results for reference when generating text. | |
| seed | Setting the seed makes text generation more deterministic; it is typically used to make results consistent. | |
| repetitionPenalty | Penalty on repetition of continuous sequences during generation. Increasing repetition_penalty reduces repetition; 1.0 means no penalty. Value range: (0, +inf). | |
| temperature | Sampling temperature that controls the diversity of the generated text. The higher the temperature, the more diverse the generated text, and vice versa. Value range: [0, 2). | |
| stops | With the stop parameter, the model stops generating text as soon as it is about to produce the specified string or token_id. | |
| maxTokens | The maximum number of tokens returned by this request. | |
| listeners | Listeners for requests, responses, and errors. | |

Usage example

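For example, building a QwenChatModel by hand:

    import dev.langchain4j.community.model.dashscope.QwenChatModel;
    import dev.langchain4j.model.chat.ChatLanguageModel;

    // Build a Qwen chat model directly, without Spring Boot auto-configuration
    ChatLanguageModel qwenModel = QwenChatModel.builder()
            .apiKey("Your API key here") // DashScope API key
            .modelName("qwen-max")       // overrides the default qwen-plus
            .build();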
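The parameter table lists several sampling-related parameters: repetitionPenalty, temperature, topK, topP and seed. To make them more concrete, here is a toy, self-contained Java sketch of how such knobs are commonly applied to next-token scores. It assumes a CTRL-style repetition penalty and standard temperature softmax plus top-k/top-p (nucleus) filtering. ToySampler, its methods and the four-token vocabulary are hypothetical. DashScope's server-side implementation may differ in details such as the order of the filters.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.Random;
import java.util.Set;

// Toy next-token sampler (hypothetical, for illustration only): shows how
// repetitionPenalty, temperature, topK, topP and seed are commonly applied.
// It is not DashScope's actual server-side implementation.
class ToySampler {

    // CTRL-style repetition penalty: make tokens that already appeared less likely.
    // penalty = 1.0 leaves the logits unchanged.
    static double[] applyRepetitionPenalty(double[] logits, Set<Integer> seen, double penalty) {
        double[] out = logits.clone();
        for (int id : seen) {
            // Divide positive logits, multiply negative ones: both lower the score.
            out[id] = out[id] > 0 ? out[id] / penalty : out[id] * penalty;
        }
        return out;
    }

    // Softmax of logits / temperature; assumes temperature > 0.
    // Smaller temperature -> sharper distribution, larger -> flatter.
    static double[] softmax(double[] logits, double temperature) {
        double max = Arrays.stream(logits).max().orElse(0.0); // numerical stability
        double[] p = new double[logits.length];
        double sum = 0.0;
        for (int i = 0; i < logits.length; i++) {
            p[i] = Math.exp((logits[i] - max) / temperature);
            sum += p[i];
        }
        for (int i = 0; i < p.length; i++) {
            p[i] /= sum;
        }
        return p;
    }

    // Keep the most probable tokens, at most topK of them, stopping as soon as
    // their cumulative probability reaches topP (nucleus sampling); renormalize.
    static double[] topKTopP(double[] probs, int topK, double topP) {
        Integer[] order = new Integer[probs.length];
        for (int i = 0; i < order.length; i++) {
            order[i] = i;
        }
        Arrays.sort(order, Comparator.comparingDouble((Integer idx) -> probs[idx]).reversed());
        double[] kept = new double[probs.length];
        double cumulative = 0.0;
        for (int rank = 0; rank < order.length && rank < topK; rank++) {
            int id = order[rank];
            kept[id] = probs[id];
            cumulative += probs[id];
            if (cumulative >= topP) {
                break; // nucleus reached
            }
        }
        double sum = Arrays.stream(kept).sum();
        for (int i = 0; i < kept.length; i++) {
            kept[i] /= sum;
        }
        return kept;
    }

    // Draw one token id from the distribution using the supplied RNG.
    static int sample(double[] probs, Random rng) {
        double r = rng.nextDouble();
        double cumulative = 0.0;
        int last = 0;
        for (int i = 0; i < probs.length; i++) {
            if (probs[i] > 0) {
                last = i;
            }
            cumulative += probs[i];
            if (r < cumulative) {
                return i;
            }
        }
        return last; // guards against rounding leaving cumulative slightly below 1
    }

    public static void main(String[] args) {
        double[] logits = {2.0, 1.0, 0.5, -1.0};   // hypothetical 4-token vocabulary
        Set<Integer> alreadyGenerated = Set.of(0); // token 0 was emitted earlier
        double[] penalized = applyRepetitionPenalty(logits, alreadyGenerated, 1.1);
        double[] probs = softmax(penalized, 0.7);
        double[] filtered = topKTopP(probs, 3, 0.8);
        Random rng = new Random(42L);              // fixed seed -> reproducible draws
        System.out.println("probs    = " + Arrays.toString(probs));
        System.out.println("filtered = " + Arrays.toString(filtered));
        System.out.println("sampled  = " + sample(filtered, rng));
    }
}
```

Lowering temperature or topP concentrates probability on the top tokens. Reusing the same seed with the same inputs reproduces the same draw, which is the behavior the seed parameter aims for on the service side.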
