[Node.js] Deployment and Operations

Author homepage: Guiat

Column: node.js

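Table of Contents

- 1. Node.js Deployment Overview
  - 1.1 Core Elements of Deployment
  - 1.2 Node.js Deployment Architecture Overview
- 2. Traditional Server Deployment
  - 2.1 Preparing the Linux Server Environment
    - System Updates and Base Software Installation
    - Creating an Application User
  - 2.2 Application Deployment Script
  - 2.3 Environment Variable Management
  - 2.4 Nginx Reverse Proxy Configuration
  - 2.5 SSL Certificate Configuration
- 3. PM2 Process Management
  - 3.1 Installing and Using PM2
  - 3.2 PM2 Configuration File
  - 3.3 PM2 Cluster Mode
  - 3.4 PM2 Monitoring and Management
  - 3.5 PM2 Log Management
- 4. Docker Containerized Deployment
  - 4.1 Dockerfile Best Practices
  - 4.2 Docker Compose Configuration
  - 4.3 Health Check Script
  - 4.4 Docker Deployment Script
  - 4.5 Docker Image Optimization
- 5. Kubernetes Deployment
  - 5.1 Basic Kubernetes Configuration
  - 5.2 Deployment Configuration
  - 5.3 Service Configuration
  - 5.4 Ingress Configuration
  - 5.5 HorizontalPodAutoscaler
  - 5.6 Kubernetes Deployment Workflow
- 6. Monitoring and Logging
  - 6.1 Application Monitoring
    - Prometheus Configuration
    - Integrating Prometheus into a Node.js Application
  - 6.2 Log Management
    - Winston Logging Configuration
    - ELK Stack Configuration
  - 6.3 APM Monitoring
    - New Relic Integration
    - Datadog Integration
  - 6.4 Health Check Endpoint
- 7. Performance Optimization and Scaling
  - 7.1 Load Balancing Configuration
    - Nginx Load Balancing
    - HAProxy Configuration
  - 7.2 Caching Strategy
    - Redis Cache Implementation
  - 7.3 Database Optimization
    - MongoDB Optimization
  - 7.4 Performance Monitoring
- 8. Security and Backup
  - 8.1 Security Configuration
    - Application Security Middleware
    - SSL/TLS Configuration
  - 8.2 Data Backup Strategy
    - MongoDB Backup Script
    - Automated Backup Configuration
  - 8.3 Disaster Recovery
    - Restore Script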
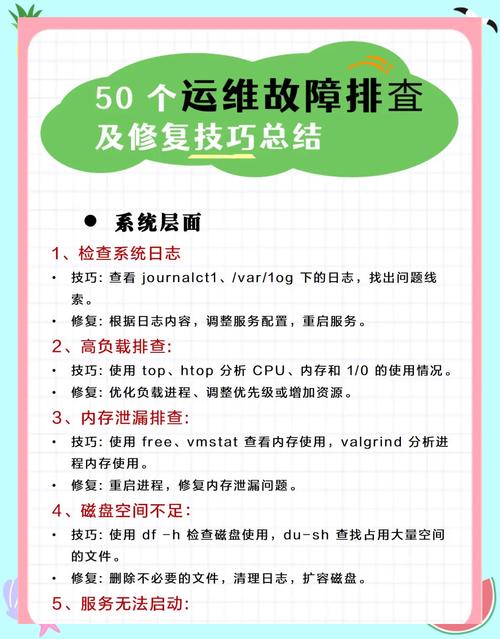

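- 9. Troubleshooting and Debugging
  - 9.1 Diagnosing Common Problems
    - Memory Leak Detection
    - Performance Profiling
  - 9.2 Log Analysis
    - Error Tracking
  - 9.3 Debugging Tools
    - Remote Debugging Configuration
    - Debugging Scripts
- 10. Best Practices and Summary
  - 10.1 Deployment Best Practices
    - Deployment Checklist
    - Environment Management Strategy
  - 10.2 Operations Automation
    - Automated Deployment Script
  - 10.3 Monitoring and Alerting Configuration
    - Prometheus Alerting Rules
    - Alert Notification Configuration
  - 10.4 Summary
    - Key Takeaways
    - Technology Selection Recommendations
    - Operations Maturity Model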


1. Node.js Deployment Overview

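Deployment and operations are the stage where a finished Node.js application is put into production and kept running securely and reliably. A sound deployment strategy, combined with disciplined operations practice, is what keeps the application highly available, scalable, and secure.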

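1.1 Core Elements of Deployment

- Consistent environments across development, staging, and production
- Application reliability and stability
- Performance optimization and resource management
- Security and access control
- Monitoring and log management
- Automated deployment pipelines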

1.2 Node.js Deployment Architecture Overview

2. Traditional Server Deployment

2.1 Preparing the Linux Server Environment

System Updates and Base Software Installation

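```bash
# Ubuntu/Debian
sudo apt update && sudo apt upgrade -y
# Install required packages
sudo apt install -y curl wget git build-essential

# CentOS/RHEL
sudo yum update -y
sudo yum install -y curl wget git gcc-c++ make

# Install Node.js from the NodeSource repository.
# These are the Ubuntu/Debian commands; on CentOS/RHEL use NodeSource's RPM
# setup script with yum instead. Node.js 18 reached end-of-life in April 2025,
# so consider a current LTS release line.
curl -fsSL https://deb.nodesource.com/setup_18.x | sudo -E bash -
sudo apt-get install -y nodejs

# Verify the installation
node --version
npm --version
```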

Creating an Application User

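```bash
# Create a dedicated application user
sudo useradd -m -s /bin/bash nodeapp
# Grant sudo only if this user must run administrative commands
# (the group is "wheel" on CentOS/RHEL)
sudo usermod -aG sudo nodeapp

# Switch to the application user
sudo su - nodeapp

# Create the application directory
mkdir -p ~/apps/myapp
cd ~/apps/myapp
```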

2.2 Application Deployment Script

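```bash
#!/bin/bash
# deploy.sh - application deployment script
set -e

APP_NAME="myapp"
APP_DIR="/home/nodeapp/apps/$APP_NAME"
REPO_URL="https://github.com/username/myapp.git"
BRANCH="main"

echo "Starting deployment of $APP_NAME..."

# Create the application directory
mkdir -p "$APP_DIR"
cd "$APP_DIR"

# Clone or update the code
if [ -d ".git" ]; then
  echo "Updating code..."
  git fetch origin
  git reset --hard "origin/$BRANCH"
else
  echo "Cloning code..."
  git clone -b "$BRANCH" "$REPO_URL" .
fi

# Install all dependencies: the build step usually needs devDependencies
echo "Installing dependencies..."
npm ci

# Build the application, then remove devDependencies
echo "Building application..."
npm run build
npm prune --omit=dev

# Create .env from the template only on the first deployment,
# so an existing production .env is never overwritten
echo "Checking environment variables..."
if [ ! -f .env ]; then
  cp .env.example .env
  echo "Created .env from .env.example; edit it before serving traffic"
fi

# Reload the app if PM2 already manages it, otherwise start it
echo "Restarting application..."
pm2 startOrReload ecosystem.config.js --env production

echo "Deployment complete!"
```

2.3 Environment Variable Management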

```bash
# .env.production
NODE_ENV=production
PORT=3000
HOST=0.0.0.0

# Database
MONGODB_URI=mongodb://localhost:27017/myapp_prod
REDIS_URL=redis://localhost:6379

# Security (use long random values and never commit them)
JWT_SECRET=your_super_secret_jwt_key_here
SESSION_SECRET=your_session_secret_here

# Third-party services
SMTP_HOST=smtp.gmail.com
SMTP_PORT=587
SMTP_USER=your_email@gmail.com
SMTP_PASS=your_app_password

# Logging
LOG_LEVEL=info
LOG_FILE=/var/log/myapp/app.log

# Performance
MAX_MEMORY=512M
CLUSTER_WORKERS=auto
```
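A `.env` file only protects production if the application refuses to start when a value is missing. The sketch below assumes the variables have already been loaded into `process.env` (for example by the `dotenv` package); `loadConfig` is a hypothetical helper, and the list of required names is an assumption based on the file above.

```javascript
// config.js - hypothetical fail-fast loader for the variables in .env.production
const REQUIRED = ['MONGODB_URI', 'REDIS_URL', 'JWT_SECRET', 'SESSION_SECRET'];

function loadConfig(env = process.env) {
  // Report every missing variable at once instead of failing one by one
  const missing = REQUIRED.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing required environment variables: ${missing.join(', ')}`);
  }

  // Validate PORT as an integer in the TCP port range
  const port = Number.parseInt(env.PORT ?? '3000', 10);
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid PORT: ${env.PORT}`);
  }

  // Freeze the result so configuration cannot be mutated at runtime
  return Object.freeze({
    nodeEnv: env.NODE_ENV ?? 'development',
    host: env.HOST ?? '0.0.0.0',
    port,
    mongodbUri: env.MONGODB_URI,
    redisUrl: env.REDIS_URL,
    jwtSecret: env.JWT_SECRET,
    sessionSecret: env.SESSION_SECRET,
    logLevel: env.LOG_LEVEL ?? 'info',
  });
}

module.exports = { loadConfig };
```

Calling `loadConfig()` at the top of the entry point turns a forgotten secret into an immediate, readable startup error rather than a failure on the first request that needs it.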

2.4 Nginx Reverse Proxy Configuration
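The `location` blocks in the configuration that follows forward the client address with `X-Forwarded-For $proxy_add_x_forwarded_for`, which appends the address Nginx saw to whatever header the client sent. Only the right-most entries added by your own proxies can be trusted. A minimal sketch, using a hypothetical `clientIp` helper and an assumed set of trusted proxy addresses, walks the list from the right and returns the first untrusted hop (Express provides the same behaviour through its `trust proxy` setting):

```javascript
// Hypothetical helper: resolve the real client IP behind trusted reverse proxies.
// remoteAddr: the TCP peer address (e.g. req.socket.remoteAddress)
// xff: the raw X-Forwarded-For header value, possibly undefined
// trustedProxies: Set of addresses that belong to our own proxies
function clientIp(remoteAddr, xff, trustedProxies) {
  // Hops in the order they were appended: original client first, nearest proxy last
  const hops = (xff ?? '')
    .split(',')
    .map((s) => s.trim())
    .filter(Boolean);
  hops.push(remoteAddr);

  // Walk from the right and skip our own proxies; anything further left is client-controlled
  for (let i = hops.length - 1; i >= 0; i -= 1) {
    if (!trustedProxies.has(hops[i])) {
      return hops[i];
    }
  }
  // Every hop is trusted: fall back to the left-most address
  return hops[0];
}

module.exports = { clientIp };
```

With Nginx on the same host, `trustedProxies` would contain `127.0.0.1`; note that Node may report an IPv4 peer as `::ffff:127.0.0.1`, so the set should list the form your server actually sees.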

```nginx
# /etc/nginx/sites-available/myapp
server {
    listen 80;
    server_name yourdomain.com www.yourdomain.com;

    # Redirect all HTTP traffic to HTTPS
    return 301 https://$server_name$request_uri;
}

server {
    listen 443 ssl http2;
    server_name yourdomain.com www.yourdomain.com;

    # SSL certificates
    ssl_certificate /etc/letsencrypt/live/yourdomain.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/yourdomain.com/privkey.pem;

    # SSL hardening. AES-GCM suites use SHA256/SHA384; "*-GCM-SHA512"
    # names do not exist in OpenSSL, so they are not listed.
    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_ciphers ECDHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES256-GCM-SHA384:ECDHE-RSA-AES128-GCM-SHA256;
    ssl_prefer_server_ciphers off;
    ssl_session_cache shared:SSL:10m;
    ssl_session_timeout 10m;

    # Security headers
    add_header X-Frame-Options DENY;
    add_header X-Content-Type-Options nosniff;
    add_header X-XSS-Protection "1; mode=block";
    add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;

    # Static files. Note: an add_header inside a location replaces all
    # server-level add_header directives for requests matched here.
    location /static/ {
        alias /home/nodeapp/apps/myapp/public/;
        expires 1y;
        add_header Cache-Control "public, immutable";
    }

    # API proxy
    location /api/ {
        proxy_pass http://127.0.0.1:3000;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_cache_bypass $http_upgrade;

        # Timeouts
        proxy_connect_timeout 60s;
        proxy_send_timeout 60s;
        proxy_read_timeout 60s;
    }

    # Main application proxy
    location / {
        proxy_pass http://127.0.0.1:3000;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_cache_bypass $http_upgrade;
    }

    # Health check
    location /health {
        access_log off;
        proxy_pass http://127.0.0.1:3000/health;
    }
}
```

2.5 SSL Certificate Configuration

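```bash
# Install Certbot
sudo apt install -y certbot python3-certbot-nginx

# Obtain an SSL certificate
sudo certbot --nginx -d yourdomain.com -d www.yourdomain.com

# Set up automatic renewal. The Debian/Ubuntu package may already install
# a systemd timer or cron job for this; check before adding another one.
sudo crontab -e
# Add the following line:
0 12 * * * /usr/bin/certbot renew --quiet
```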

3. PM2 Process Management

3.1 Installing and Using PM2

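```bash
# Install PM2 globally
npm install -g pm2

# Start the application
pm2 start app.js --name "myapp"
# Check process status
pm2 status
# View logs
pm2 logs myapp
# Restart the application
pm2 restart myapp
# Stop the application
pm2 stop myapp
# Remove the application from PM2
pm2 delete myapp
```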

3.2 PM2 Configuration File

```javascript
// ecosystem.config.js
module.exports = {
  apps: [
    {
      name: 'myapp',
      script: './src/index.js',
      instances: 'max', // or a fixed number, e.g. 4
      exec_mode: 'cluster',
      env: {
        NODE_ENV: 'development',
        PORT: 3000
      },
      env_production: {
        NODE_ENV: 'production',
        PORT: 3000,
        MONGODB_URI: 'mongodb://localhost:27017/myapp_prod'
      },
      // Logging
      log_file: './logs/combined.log',
      out_file: './logs/out.log',
      error_file: './logs/error.log',
      log_date_format: 'YYYY-MM-DD HH:mm:ss Z',
      // Automatic restarts
      watch: false,
      ignore_watch: ['node_modules', 'logs'],
      max_memory_restart: '1G',
      // Restart policy: a process must stay up for min_uptime to count as started;
      // after max_restarts unstable restarts PM2 stops restarting it
      min_uptime: '10s',
      max_restarts: 10,
      // Time (ms) PM2 waits for the app to listen during a reload before moving on
      listen_timeout: 3000
      // PM2's documented ecosystem options do not include an env_file setting;
      // load .env inside the application (for example with the dotenv package)
    }
  ],
  deploy: {
    production: {
      user: 'nodeapp',
      host: 'your-server.com',
      ref: 'origin/main',
      repo: 'https://github.com/username/myapp.git',
      path: '/home/nodeapp/apps/myapp',
      'post-deploy': 'npm install && npm run build && pm2 reload ecosystem.config.js --env production'
    }
  }
};
```

3.3 PM2 Cluster Mode

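```javascript
// cluster.config.js
module.exports = {
  apps: [
    {
      name: 'myapp-cluster',
      script: './src/index.js',
      instances: 4, // 4 worker processes
      exec_mode: 'cluster',
      // Name of the env var holding each worker's index (PM2's default is NODE_APP_INSTANCE)
      instance_var: 'INSTANCE_ID',
      // Cluster timing
      kill_timeout: 5000, // ms PM2 waits after SIGINT before sending SIGKILL
      listen_timeout: 3000, // ms PM2 waits for a new worker to listen during reload
      // Memory limits
      max_memory_restart: '500M',
      node_args: '--max-old-space-size=512',
      env_production: {
        NODE_ENV: 'production',
        PORT: 3000
      }
    }
  ]
};
```

3.4 PM2 Monitoring and Management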

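```bash
# Real-time monitoring dashboard
pm2 monit
# Detailed information about an app
pm2 show myapp
# Reload all apps (zero downtime in cluster mode)
pm2 reload all
# Save the current process list
pm2 save
# Start PM2 on boot (pm2 startup prints a command to run with sudo)
pm2 startup
pm2 save
# Update the in-memory PM2 daemon after upgrading the pm2 package
pm2 update
```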
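`pm2 reload` is only zero-downtime if each old worker stops cleanly: PM2 sends `SIGINT` and, if the process is still alive after `kill_timeout`, sends `SIGKILL`. A minimal graceful-shutdown sketch using only Node's `http` module follows; the `shutdown` function and its 4-second budget are assumptions, chosen to stay under the 5000 ms `kill_timeout` of the cluster config in 3.3.

```javascript
const http = require('http');

const server = http.createServer((req, res) => {
  res.writeHead(200, { 'Content-Type': 'text/plain' });
  res.end('ok');
});

// Hypothetical shutdown routine: stop accepting connections, let in-flight
// requests finish, and force-close remaining sockets after timeoutMs.
function shutdown(srv, timeoutMs = 4000) {
  return new Promise((resolve) => {
    const timer = setTimeout(() => {
      // Deadline reached: drop connections that are still open (Node 18.2+)
      if (typeof srv.closeAllConnections === 'function') srv.closeAllConnections();
      resolve('forced');
    }, timeoutMs);
    timer.unref();

    srv.close(() => {
      clearTimeout(timer);
      resolve('clean');
    });
    // Idle keep-alive sockets would otherwise keep close() waiting (Node 18.2+)
    if (typeof srv.closeIdleConnections === 'function') srv.closeIdleConnections();
  });
}

// PM2 sends SIGINT on stop/reload; Docker and Kubernetes send SIGTERM
for (const signal of ['SIGINT', 'SIGTERM']) {
  process.once(signal, async () => {
    const result = await shutdown(server);
    console.log(`shutdown: ${result}`);
    process.exit(0);
  });
}

module.exports = { server, shutdown };
```

In a real application the MongoDB and Redis clients would also be closed inside `shutdown` before the process exits.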

3.5 PM2 Log Management

```bash
# Stream logs in real time
pm2 logs
# Logs of a specific app
pm2 logs myapp
# Empty all log files
pm2 flush
# Install the log rotation module
pm2 install pm2-logrotate
# Configure log rotation
pm2 set pm2-logrotate:max_size 10M
pm2 set pm2-logrotate:retain 30
pm2 set pm2-logrotate:compress true
```

4. Docker Containerized Deployment

4.1 Dockerfile Best Practices

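```dockerfile
# Multi-stage build
FROM node:18-alpine AS builder

# Working directory
WORKDIR /app

# Copy package manifests first to make the install layer cacheable
COPY package*.json ./

# Install all dependencies (the build step usually needs devDependencies)
RUN npm ci

# Copy source code
COPY . .

# Build the application, then remove devDependencies
RUN npm run build && npm prune --omit=dev && npm cache clean --force

# Production stage
FROM node:18-alpine AS production

# dumb-init forwards signals to Node and reaps zombie processes
RUN apk add --no-cache dumb-init

# Create a non-root user and group
RUN addgroup -g 1001 -S nodejs && adduser -S nodeapp -u 1001 -G nodejs

WORKDIR /app

# Copy the build output, production dependencies and the health check script
COPY --from=builder --chown=nodeapp:nodejs /app/dist ./dist
COPY --from=builder --chown=nodeapp:nodejs /app/node_modules ./node_modules
COPY --from=builder --chown=nodeapp:nodejs /app/package*.json ./
COPY --from=builder --chown=nodeapp:nodejs /app/healthcheck.js ./

# Switch to the non-root user
USER nodeapp

# Expose the application port
EXPOSE 3000

# Health check
HEALTHCHECK --interval=30s --timeout=3s --start-period=5s --retries=3 \
  CMD node healthcheck.js

# Start the application via dumb-init
ENTRYPOINT ["dumb-init", "--"]
CMD ["node", "dist/index.js"]
```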

4.2 Docker Compose Configuration

```yaml
# docker-compose.yml
version: '3.8'

services:
  app:
    build:
      context: .
      dockerfile: Dockerfile
      target: production
    image: myapp:latest
    container_name: myapp
    restart: unless-stopped
    ports:
      - "3000:3000"
    environment:
      - NODE_ENV=production
      # Credentials must match the mongo and redis services below
      - MONGODB_URI=mongodb://admin:password@mongo:27017/myapp?authSource=admin
      - REDIS_URL=redis://:password@redis:6379
    depends_on:
      mongo:
        condition: service_healthy
      redis:
        condition: service_healthy
    volumes:
      - app_logs:/app/logs
      - app_uploads:/app/uploads
    networks:
      - app_network
    healthcheck:
      test: ["CMD", "node", "healthcheck.js"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 40s

  mongo:
    image: mongo:6
    container_name: myapp_mongo
    restart: unless-stopped
    # Publishing the port is handy for debugging; remove it in production
    ports:
      - "27017:27017"
    environment:
      # Placeholder credentials; use secrets or an env file in practice
      MONGO_INITDB_ROOT_USERNAME: admin
      MONGO_INITDB_ROOT_PASSWORD: password
      MONGO_INITDB_DATABASE: myapp
    volumes:
      - mongo_data:/data/db
      - ./mongo-init.js:/docker-entrypoint-initdb.d/mongo-init.js:ro
    networks:
      - app_network
    healthcheck:
      test: echo 'db.runCommand("ping").ok' | mongosh localhost:27017/test --quiet
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 40s

  redis:
    image: redis:7-alpine
    container_name: myapp_redis
    restart: unless-stopped
    ports:
      - "6379:6379"
    command: redis-server --appendonly yes --requirepass password
    volumes:
      - redis_data:/data
    networks:
      - app_network
    healthcheck:
      # Must authenticate because requirepass is set
      test: ["CMD", "redis-cli", "-a", "password", "ping"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 40s

  nginx:
    image: nginx:alpine
    container_name: myapp_nginx
    restart: unless-stopped
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - ./nginx.conf:/etc/nginx/nginx.conf:ro
      - ./ssl:/etc/nginx/ssl:ro
      - app_static:/var/www/static:ro
    depends_on:
      - app
    networks:
      - app_network

volumes:
  mongo_data:
  redis_data:
  app_logs:
  app_uploads:
  app_static:

networks:
  app_network:
    driver: bridge
```

4.3 Health Check Script

```javascript
// healthcheck.js - exit code 0 if GET /health returns 200, otherwise 1
const http = require('http');

const options = {
  // 127.0.0.1 rather than "localhost", which may resolve to ::1 while the
  // app listens on IPv4 only (HOST=0.0.0.0)
  hostname: '127.0.0.1',
  port: process.env.PORT || 3000,
  path: '/health',
  method: 'GET',
  timeout: 2000
};

const request = http.request(options, (res) => {
  if (res.statusCode === 200) {
    process.exit(0);
  } else {
    process.exit(1);
  }
});

request.on('error', () => {
  process.exit(1);
});

request.on('timeout', () => {
  request.destroy();
  process.exit(1);
});

request.end();
```

4.4 Docker Deployment Script

```bash
#!/bin/bash
# docker-deploy.sh
set -e

APP_NAME="myapp"
IMAGE_NAME="myapp:latest"
CONTAINER_NAME="myapp_container"

echo "Starting Docker deployment..."

# Build the image
echo "Building Docker image..."
docker build -t "$IMAGE_NAME" .

# Stop and remove the old container (ignore errors if it does not exist)
echo "Stopping old container..."
docker stop "$CONTAINER_NAME" 2>/dev/null || true
docker rm "$CONTAINER_NAME" 2>/dev/null || true

# Start the new container
echo "Starting new container..."
docker run -d \
  --name "$CONTAINER_NAME" \
  --restart unless-stopped \
  -p 3000:3000 \
  -e NODE_ENV=production \
  -v "$(pwd)/logs:/app/logs" \
  "$IMAGE_NAME"

# Give the container time to start
echo "Waiting for the container to start..."
sleep 10

# Check the container status
if docker ps | grep -q "$CONTAINER_NAME"; then
  echo "Deployment succeeded! The container is running."
  docker logs --tail 20 "$CONTAINER_NAME"
else
  echo "Deployment failed! The container did not start."
  docker logs "$CONTAINER_NAME"
  exit 1
fi
```

4.5 Docker Image Optimization

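```dockerfile
# Optimized Dockerfile
FROM node:18-alpine AS base
RUN apk add --no-cache dumb-init
WORKDIR /app
COPY package*.json ./
# Production-only dependencies, reused by the runtime stage
RUN npm ci --omit=dev && npm cache clean --force

FROM base AS build
# Full install including devDependencies, needed for the build
RUN npm ci
COPY . .
RUN npm run build

FROM base AS runtime
COPY --from=build /app/dist ./dist
# The HEALTHCHECK below needs this script inside the image
COPY --from=build /app/healthcheck.js ./
RUN addgroup -g 1001 -S nodejs && adduser -S nodeapp -u 1001 -G nodejs
USER nodeapp
EXPOSE 3000
HEALTHCHECK --interval=30s CMD node healthcheck.js
ENTRYPOINT ["dumb-init", "--"]
CMD ["node", "dist/index.js"]
```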

5. Kubernetes Deployment

5.1 Basic Kubernetes Configuration

```yaml
# namespace.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: myapp
  labels:
    name: myapp
```

```yaml
# configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: myapp-config
  namespace: myapp
data:
  NODE_ENV: "production"
  PORT: "3000"
  LOG_LEVEL: "info"
```

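```yaml
# secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: myapp-secrets
  namespace: myapp
type: Opaque
data:
  # Base64-encoded values (an encoding, not encryption), e.g.
  # echo -n 'redis://redis:6379' | base64
  MONGODB_URI: bW9uZ29kYjovL21vbmdvOjI3MDE3L215YXBw   # mongodb://mongo:27017/myapp
  JWT_SECRET: eW91cl9qd3Rfc2VjcmV0X2hlcmU=           # your_jwt_secret_here
  REDIS_URL: cmVkaXM6Ly9yZWRpczo2Mzc5                # redis://redis:6379
```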
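Values under a Secret's `data:` field are only Base64-encoded, so a typo in an encoded string silently becomes a wrong setting. Decoding the values before applying the manifest is a cheap check. The sketch below uses Node's built-in `Buffer`; `decodeSecretData` and `encodeSecretValue` are hypothetical helpers. For example, `cmVkaXM6Ly9yZWRpczozNjM3OQ==` decodes to `redis://redis:36379` (port 36379, not 6379), whereas `cmVkaXM6Ly9yZWRpczo2Mzc5` decodes to `redis://redis:6379`. Kubernetes also accepts plain-text values under `stringData:`, which avoids hand-encoding altogether.

```javascript
// Hypothetical helper: decode a Secret's data map to review the plain values
function decodeSecretData(data) {
  return Object.fromEntries(
    Object.entries(data).map(([key, value]) => [
      key,
      Buffer.from(value, 'base64').toString('utf8'),
    ]),
  );
}

// Encode a plain value the same way `echo -n value | base64` does
function encodeSecretValue(value) {
  return Buffer.from(value, 'utf8').toString('base64');
}

const decoded = decodeSecretData({
  MONGODB_URI: 'bW9uZ29kYjovL21vbmdvOjI3MDE3L215YXBw',
  REDIS_URL: 'cmVkaXM6Ly9yZWRpczozNjM3OQ==',
});
console.log(decoded.REDIS_URL); // redis://redis:36379 (wrong port)
console.log(encodeSecretValue('redis://redis:6379')); // cmVkaXM6Ly9yZWRpczo2Mzc5

module.exports = { decodeSecretData, encodeSecretValue };
```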

5.2 Deployment Configuration
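The Deployment that follows uses two probes with different consequences: a failing `livenessProbe` (`/health`) makes the kubelet restart the container, while a failing `readinessProbe` (`/ready`) only removes the Pod from the Service endpoints. Because the `/health` handler in section 6.4 checks MongoDB and Redis, using it for liveness restarts Pods whenever a dependency is down. A minimal sketch with Node's `http` module keeps liveness dependency-free and puts dependency state behind `/ready`; the `state.ready` flag is a hypothetical value the app would set after connecting and clear when shutdown begins.

```javascript
const http = require('http');

// Hypothetical readiness flag: true once DB/cache connections succeed,
// set back to false as soon as graceful shutdown starts.
const state = { ready: false };

const server = http.createServer((req, res) => {
  if (req.url === '/health') {
    // Liveness: the process is running and the event loop responds
    res.writeHead(200, { 'Content-Type': 'application/json' });
    res.end(JSON.stringify({ status: 'ok' }));
    return;
  }
  if (req.url === '/ready') {
    // Readiness: receive traffic only while dependencies are available
    const code = state.ready ? 200 : 503;
    res.writeHead(code, { 'Content-Type': 'application/json' });
    res.end(JSON.stringify({ ready: state.ready }));
    return;
  }
  res.writeHead(404);
  res.end();
});

module.exports = { server, state };
```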

```yaml
# deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment
  namespace: myapp
  labels:
    app: myapp
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: myapp
          image: myapp:latest
          ports:
            - containerPort: 3000
          env:
            - name: NODE_ENV
              valueFrom:
                configMapKeyRef:
                  name: myapp-config
                  key: NODE_ENV
            - name: PORT
              valueFrom:
                configMapKeyRef:
                  name: myapp-config
                  key: PORT
            - name: MONGODB_URI
              valueFrom:
                secretKeyRef:
                  name: myapp-secrets
                  key: MONGODB_URI
            - name: JWT_SECRET
              valueFrom:
                secretKeyRef:
                  name: myapp-secrets
                  key: JWT_SECRET
          resources:
            requests:
              memory: "256Mi"
              cpu: "250m"
            limits:
              memory: "512Mi"
              cpu: "500m"
          livenessProbe:
            httpGet:
              path: /health
              port: 3000
            initialDelaySeconds: 30
            periodSeconds: 10
            timeoutSeconds: 5
            failureThreshold: 3
          readinessProbe:
            httpGet:
              path: /ready
              port: 3000
            initialDelaySeconds: 5
            periodSeconds: 5
            timeoutSeconds: 3
            failureThreshold: 3
          volumeMounts:
            - name: app-logs
              mountPath: /app/logs
      volumes:
        - name: app-logs
          emptyDir: {}
      imagePullSecrets:
        - name: regcred
```

5.3 Service Configuration

```yaml
# service.yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-service
  namespace: myapp
  labels:
    app: myapp
spec:
  selector:
    app: myapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
  type: ClusterIP
```

5.4 Ingress Configuration

```yaml
# ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: myapp-ingress
  namespace: myapp
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    # ingress-nginx rate limit: at most 100 requests per minute per client IP
    nginx.ingress.kubernetes.io/limit-rpm: "100"
spec:
  # Replaces the deprecated kubernetes.io/ingress.class annotation
  ingressClassName: nginx
  tls:
    - hosts:
        - yourdomain.com
      secretName: myapp-tls
  rules:
    - host: yourdomain.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: myapp-service
                port:
                  number: 80
```

5.5 HorizontalPodAutoscaler
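The HPA controller's documented scaling rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. It skips scaling when the ratio is within a tolerance of 1.0 (0.1 by default) and clamps the result to `minReplicas`/`maxReplicas`. The sketch below reproduces only that core calculation, e.g. for the 70% CPU target in the manifest that follows; it deliberately ignores the `behavior` policies, stabilization windows, and multi-metric selection (with several metrics the controller takes the largest proposal). `desiredReplicas` is a hypothetical name.

```javascript
// Simplified HPA replica calculation (no behavior policies or stabilization)
function desiredReplicas({ current, currentUtilization, targetUtilization, min, max, tolerance = 0.1 }) {
  const ratio = currentUtilization / targetUtilization;
  // Close enough to the target: keep the current replica count
  if (Math.abs(ratio - 1) <= tolerance) {
    return current;
  }
  const proposed = Math.ceil(current * ratio);
  // Respect minReplicas / maxReplicas from the HPA spec
  return Math.min(max, Math.max(min, proposed));
}

module.exports = { desiredReplicas };
```

For instance, 3 Pods averaging 140% CPU against a 70% target propose `ceil(3 * 2) = 6` replicas.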

```yaml
# hpa.yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: myapp-hpa
  namespace: myapp
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: myapp-deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
    - type: Resource
      resource:
        name: memory
        target:
          type: Utilization
          averageUtilization: 80
  behavior:
    scaleDown:
      stabilizationWindowSeconds: 300
      policies:
        - type: Percent
          value: 10
          periodSeconds: 60
    scaleUp:
      stabilizationWindowSeconds: 0
      policies:
        - type: Percent
          value: 100
          periodSeconds: 15
        - type: Pods
          value: 4
          periodSeconds: 15
      selectPolicy: Max
```

5.6 Kubernetes Deployment Workflow

6. Monitoring and Logging

6.1 Application Monitoring

Prometheus Configuration

```yaml
# prometheus.yml
global:
  scrape_interval: 15s
  evaluation_interval: 15s

rule_files:
  - "rules/*.yml"

scrape_configs:
  - job_name: 'myapp'
    static_configs:
      - targets: ['localhost:3000']
    metrics_path: '/metrics'
    scrape_interval: 5s

  - job_name: 'node-exporter'
    static_configs:
      - targets: ['localhost:9100']

alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - alertmanager:9093
```

Integrating Prometheus into a Node.js Application
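The middleware that follows labels metrics with `req.route.path` when Express matched a route, but falls back to `req.path` otherwise (for example on 404s). Raw paths such as `/users/123` create a separate time series per ID, which can make Prometheus memory grow without bound. A minimal sketch of a hypothetical `normalizeRoute` fallback that collapses numeric IDs, MongoDB ObjectIds, and UUIDs into `:id`:

```javascript
// Hypothetical fallback for the `route` label when req.route is not set
const ID_PATTERNS = [
  /^\d+$/, // numeric IDs
  /^[0-9a-f]{24}$/i, // MongoDB ObjectId
  /^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$/i, // UUID
];

function normalizeRoute(path) {
  // Drop any query string, then replace ID-like segments
  const segments = path.split('?')[0].split('/');
  return segments
    .map((segment) => (ID_PATTERNS.some((re) => re.test(segment)) ? ':id' : segment))
    .join('/');
}

module.exports = { normalizeRoute };
```

In the middleware this would replace the fallback: `const route = req.route ? req.route.path : normalizeRoute(req.path);`.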

```javascript
// metrics.js
const promClient = require('prom-client');

// Create a dedicated registry
const register = new promClient.Registry();

// Default process metrics (CPU, memory, event loop lag, GC)
promClient.collectDefaultMetrics({ register, prefix: 'myapp_' });

// Custom metrics
const httpRequestDuration = new promClient.Histogram({
  name: 'myapp_http_request_duration_seconds',
  help: 'HTTP request duration in seconds',
  labelNames: ['method', 'route', 'status_code'],
  buckets: [0.1, 0.5, 1, 2, 5]
});

const httpRequestTotal = new promClient.Counter({
  name: 'myapp_http_requests_total',
  help: 'Total number of HTTP requests',
  labelNames: ['method', 'route', 'status_code']
});

const activeConnections = new promClient.Gauge({
  name: 'myapp_active_connections',
  help: 'Number of active connections'
});

// Register the custom metrics
register.registerMetric(httpRequestDuration);
register.registerMetric(httpRequestTotal);
register.registerMetric(activeConnections);

// Middleware: record duration and count when each response finishes
const metricsMiddleware = (req, res, next) => {
  const start = Date.now();

  res.on('finish', () => {
    const duration = (Date.now() - start) / 1000;
    const route = req.route ? req.route.path : req.path;

    httpRequestDuration
      .labels(req.method, route, res.statusCode)
      .observe(duration);

    httpRequestTotal
      .labels(req.method, route, res.statusCode)
      .inc();
  });

  next();
};

// Expose the registry for Prometheus to scrape, e.g.:
// app.get('/metrics', async (req, res) => {
//   res.set('Content-Type', register.contentType);
//   res.end(await register.metrics());
// });

module.exports = { register, metricsMiddleware, activeConnections };
```

6.2 Log Management

Winston Logging Configuration

```javascript
// logger.js
const winston = require('winston');
const path = require('path');

// Log format: timestamp, full error stacks, JSON output
const logFormat = winston.format.combine(
  winston.format.timestamp({ format: 'YYYY-MM-DD HH:mm:ss' }),
  winston.format.errors({ stack: true }),
  winston.format.json()
);

// Create the logger
const logger = winston.createLogger({
  level: process.env.LOG_LEVEL || 'info',
  format: logFormat,
  defaultMeta: {
    service: 'myapp',
    version: process.env.APP_VERSION || '1.0.0'
  },
  transports: [
    // Error log
    new winston.transports.File({
      filename: path.join(__dirname, '../logs/error.log'),
      level: 'error',
      maxsize: 10485760, // 10MB
      maxFiles: 5,
      tailable: true
    }),
    // Combined log
    new winston.transports.File({
      filename: path.join(__dirname, '../logs/combined.log'),
      maxsize: 10485760, // 10MB
      maxFiles: 5,
      tailable: true
    }),
    // Console output
    new winston.transports.Console({
      format: winston.format.combine(
        winston.format.colorize(),
        winston.format.simple()
      )
    })
  ]
});

// Request logging middleware
const requestLogger = (req, res, next) => {
  const start = Date.now();

  res.on('finish', () => {
    const duration = Date.now() - start;
    logger.info('HTTP Request', {
      method: req.method,
      url: req.url,
      statusCode: res.statusCode,
      duration: `${duration}ms`,
      userAgent: req.get('User-Agent'),
      ip: req.ip,
      userId: req.user?.id
    });
  });

  next();
};

module.exports = { logger, requestLogger };
```

ELK Stack Configuration

```yaml
# docker-compose.elk.yml
version: '3.8'

services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:8.8.0
    container_name: elasticsearch
    environment:
      - discovery.type=single-node
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      # Security disabled for a local setup only; enable it in production
      - xpack.security.enabled=false
    ports:
      - "9200:9200"
    volumes:
      - elasticsearch_data:/usr/share/elasticsearch/data

  logstash:
    image: docker.elastic.co/logstash/logstash:8.8.0
    container_name: logstash
    ports:
      - "5044:5044"
    volumes:
      - ./logstash.conf:/usr/share/logstash/pipeline/logstash.conf
    depends_on:
      - elasticsearch

  kibana:
    image: docker.elastic.co/kibana/kibana:8.8.0
    container_name: kibana
    ports:
      - "5601:5601"
    environment:
      - ELASTICSEARCH_HOSTS=http://elasticsearch:9200
    depends_on:
      - elasticsearch

volumes:
  elasticsearch_data:
```

```text
# logstash.conf
input {
  beats {
    port => 5044
  }
}

filter {
  if [fields][service] == "myapp" {
    json {
      source => "message"
    }
    date {
      match => [ "timestamp", "yyyy-MM-dd HH:mm:ss" ]
    }
    mutate {
      remove_field => [ "message", "host", "agent", "ecs", "log", "input" ]
    }
  }
}

output {
  elasticsearch {
    hosts => ["elasticsearch:9200"]
    index => "myapp-logs-%{+YYYY.MM.dd}"
  }
}
```

6.3 APM Monitoring

New Relic Integration

```javascript
// newrelic.js
'use strict';

exports.config = {
  app_name: ['MyApp'],
  license_key: process.env.NEW_RELIC_LICENSE_KEY,
  logging: {
    level: 'info'
  },
  allow_all_headers: true,
  attributes: {
    // Never send credentials or cookies to the APM backend
    exclude: [
      'request.headers.cookie',
      'request.headers.authorization',
      'request.headers.proxyAuthorization',
      'request.headers.setCookie*',
      'request.headers.x*',
      'response.headers.cookie',
      'response.headers.authorization',
      'response.headers.proxyAuthorization',
      'response.headers.setCookie*',
      'response.headers.x*'
    ]
  }
};
```

Datadog Integration

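```javascript
// app.js
// dd-trace must be initialized before any other module is required
const tracer = require('dd-trace').init({
  service: 'myapp',
  env: process.env.NODE_ENV,
  version: process.env.APP_VERSION
});

const express = require('express');
const app = express();

// Datadog middleware: add tags to the active request span
app.use((req, res, next) => {
  const span = tracer.scope().active();
  if (span) {
    span.setTag('user.id', req.user?.id);
    // req.route is only populated once a route handler matches, so in
    // app-level middleware it is usually undefined
    if (req.route) {
      span.setTag('http.route', req.route.path);
    }
  }
  next();
});
```

6.4 Health Check Endpoint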
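A health endpoint that awaits a stuck dependency can hang until the probe's own timeout fires, which tells the orchestrator very little. One approach, sketched below with hypothetical `withTimeout` and `runChecks` helpers, runs all dependency checks in parallel and gives each its own deadline; the check functions are placeholders for calls such as a Redis `ping()`.

```javascript
// Reject if `promise` does not settle within `ms` milliseconds
function withTimeout(promise, ms, name) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`${name} check timed out after ${ms}ms`)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// checks: { name: () => Promise }; every check runs in parallel with its own deadline
async function runChecks(checks, ms = 1000) {
  const names = Object.keys(checks);
  const results = await Promise.allSettled(
    // Promise.resolve().then(...) also turns synchronous throws into rejections
    names.map((name) => withTimeout(Promise.resolve().then(checks[name]), ms, name)),
  );

  const report = { status: 'ok', checks: {} };
  results.forEach((result, i) => {
    if (result.status === 'fulfilled') {
      report.checks[names[i]] = { status: 'ok' };
    } else {
      report.checks[names[i]] = { status: 'error', message: result.reason.message };
      report.status = 'error';
    }
  });
  return report;
}

module.exports = { withTimeout, runChecks };
```

The handler would then answer `200` when `report.status === 'ok'` and `503` otherwise, well within the probe's `timeoutSeconds`.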

```javascript
// health.js
const mongoose = require('mongoose');
const redis = require('redis');

// One Redis client per process, connected once. Creating a client per request
// without connect() fails in node-redis v4 ("The client is closed") and
// would open a new connection on every probe.
const redisClient = redis.createClient({ url: process.env.REDIS_URL });
redisClient.on('error', (err) => console.error('Redis health client error:', err));
redisClient.connect().catch(() => {}); // failures are reported by the 'error' listener

const healthCheck = async (req, res) => {
  const health = {
    status: 'ok',
    timestamp: new Date().toISOString(),
    uptime: process.uptime(),
    version: process.env.APP_VERSION || '1.0.0',
    checks: {}
  };

  try {
    // Database connection check (readyState 1 = connected)
    if (mongoose.connection.readyState === 1) {
      health.checks.database = { status: 'ok' };
    } else {
      health.checks.database = { status: 'error', message: 'Database not connected' };
      health.status = 'error';
    }

    // Redis connection check; isReady avoids queueing a PING while disconnected
    try {
      if (!redisClient.isReady) {
        throw new Error('Redis client not ready');
      }
      await redisClient.ping();
      health.checks.redis = { status: 'ok' };
    } catch (error) {
      health.checks.redis = { status: 'error', message: error.message };
      health.status = 'error';
    }

    // Memory usage
    const memUsage = process.memoryUsage();
    health.checks.memory = {
      status: 'ok',
      usage: {
        rss: `${Math.round(memUsage.rss / 1024 / 1024)}MB`,
        heapTotal: `${Math.round(memUsage.heapTotal / 1024 / 1024)}MB`,
        heapUsed: `${Math.round(memUsage.heapUsed / 1024 / 1024)}MB`
      }
    };

    const statusCode = health.status === 'ok' ? 200 : 503;
    res.status(statusCode).json(health);
  } catch (error) {
    res.status(503).json({
      status: 'error',
      message: error.message,
      timestamp: new Date().toISOString()
    });
  }
};

module.exports = { healthCheck };
```

7. Performance Optimization and Scaling

7.1 Load Balancing Configuration

Nginx Load Balancing

```nginx
# nginx.conf (these directives belong in the http block)

# Rate-limit zones and cache storage must be declared at http level,
# not inside a server block
limit_conn_zone $binary_remote_addr zone=conn_limit_per_ip:10m;
limit_req_zone $binary_remote_addr zone=req_limit_per_ip:10m rate=5r/s;
proxy_cache_path /var/cache/nginx/myapp levels=1:2 keys_zone=my_cache:10m max_size=1g inactive=60m;

upstream myapp_backend {
    least_conn;
    server 127.0.0.1:3001 weight=3 max_fails=3 fail_timeout=30s;
    server 127.0.0.1:3002 weight=3 max_fails=3 fail_timeout=30s;
    server 127.0.0.1:3003 weight=2 max_fails=3 fail_timeout=30s;
    server 127.0.0.1:3004 backup;
}

server {
    listen 80;
    server_name yourdomain.com;

    location / {
        # Connection and request limits per client IP
        limit_conn conn_limit_per_ip 10;
        limit_req zone=req_limit_per_ip burst=10 nodelay;

        proxy_pass http://myapp_backend;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_cache_bypass $http_upgrade;

        # Timeouts
        proxy_connect_timeout 5s;
        proxy_send_timeout 60s;
        proxy_read_timeout 60s;

        # Response caching (make sure per-user responses are not cached)
        proxy_cache my_cache;
        proxy_cache_valid 200 302 10m;
        proxy_cache_valid 404 1m;
    }
}
```

HAProxy Configuration

```text
# haproxy.cfg
global
    daemon
    maxconn 4096
    log stdout local0

defaults
    mode http
    timeout connect 5000ms
    timeout client 50000ms
    timeout server 50000ms
    option httplog

frontend myapp_frontend
    bind *:80
    default_backend myapp_backend

backend myapp_backend
    balance roundrobin
    option httpchk GET /health
    server app1 127.0.0.1:3001 check
    server app2 127.0.0.1:3002 check
    server app3 127.0.0.1:3003 check
    server app4 127.0.0.1:3004 check backup
```

7.2 Caching Strategy

Redis Cache Implementation
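The middleware that follows implements cache-aside at the HTTP layer: read the key, fall back to the handler on a miss, and write the result with a TTL. The same pattern at function level is sketched here against an in-memory `Map` standing in for Redis, with one addition: concurrent misses for the same key share a single in-flight load, so an expired hot key does not send a burst of identical queries to the database. `createCache`, `getOrLoad`, and the injectable clock are hypothetical; with Redis the value would be stored as JSON via `setEx`, and this de-duplication only covers a single process.

```javascript
// Hypothetical cache-aside helper with per-key request coalescing
function createCache({ now = () => Date.now() } = {}) {
  const store = new Map(); // key -> { value, expiresAt }
  const inFlight = new Map(); // key -> Promise of a running load

  async function getOrLoad(key, ttlSeconds, loader) {
    const hit = store.get(key);
    if (hit && hit.expiresAt > now()) return hit.value;

    // Another caller is already loading this key: share its result
    if (inFlight.has(key)) return inFlight.get(key);

    // Register the load before any callback can run; failures are not cached
    const load = Promise.resolve()
      .then(loader)
      .then((value) => {
        store.set(key, { value, expiresAt: now() + ttlSeconds * 1000 });
        return value;
      })
      .finally(() => inFlight.delete(key));
    inFlight.set(key, load);
    return load;
  }

  // Drop a key after the underlying data changes
  function invalidate(key) {
    store.delete(key);
  }

  return { getOrLoad, invalidate };
}

module.exports = { createCache };
```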

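```javascript
// cache.js (node-redis v4 API)
const redis = require('redis');

const client = redis.createClient({
  url: process.env.REDIS_URL
});

client.on('error', (err) => {
  console.error('Redis Client Error', err);
});

// Connect once at startup; log failures instead of leaving an unhandled rejection
client.connect().catch((err) => console.error('Redis connect failed', err));

// Caching middleware: caches JSON responses of GET requests for `duration` seconds
const cacheMiddleware = (duration = 300) => {
  return async (req, res, next) => {
    if (req.method !== 'GET') {
      return next();
    }

    // The key ignores the user, so use this only on non-personalized routes
    const key = `cache:${req.originalUrl}`;

    try {
      const cached = await client.get(key);
      if (cached) {
        return res.json(JSON.parse(cached));
      }

      // Wrap res.json so the response body is cached before it is sent
      const originalJson = res.json;
      res.json = function (data) {
        // Cache successful responses only; a failed write is logged, not thrown
        if (res.statusCode === 200) {
          client.setEx(key, duration, JSON.stringify(data))
            .catch((err) => console.error('Cache write error:', err));
        }
        return originalJson.call(this, data);
      };

      next();
    } catch (error) {
      console.error('Cache error:', error);
      next();
    }
  };
};

// Invalidate keys matching a pattern. KEYS blocks Redis while it walks the
// whole keyspace; prefer SCAN (client.scanIterator) on large datasets.
const invalidateCache = async (pattern) => {
  try {
    const keys = await client.keys(pattern);
    if (keys.length > 0) {
      await client.del(keys);
    }
  } catch (error) {
    console.error('Cache invalidation error:', error);
  }
};

module.exports = { client, cacheMiddleware, invalidateCache };
```

7.3 Database Optimization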

MongoDB Optimization
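The `connectDB` function that follows exits the process on the first failed connection attempt. When the database starts more slowly than the app (common with `docker compose` or Kubernetes), retrying with exponential backoff is gentler. A sketch with a hypothetical `connectWithRetry` wrapper; in practice `connect` would be `() => mongoose.connect(uri, options)`, and the retry counts and delays are assumptions.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retry `connect` with exponential backoff: base, 2*base, 4*base, ... capped at maxDelayMs.
// `retries` is the number of extra attempts after the first one.
async function connectWithRetry(connect, { retries = 5, baseDelayMs = 500, maxDelayMs = 10000, log = console.warn } = {}) {
  for (let attempt = 0; ; attempt += 1) {
    try {
      return await connect();
    } catch (error) {
      if (attempt >= retries) {
        throw error; // give up; the caller decides whether to exit
      }
      const delay = Math.min(maxDelayMs, baseDelayMs * 2 ** attempt);
      log(`Connection attempt ${attempt + 1} failed (${error.message}); retrying in ${delay}ms`);
      await sleep(delay);
    }
  }
}

module.exports = { connectWithRetry };
```

The final error still propagates, so `connectDB` can keep its `process.exit(1)` for the case where the database never comes up.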

```javascript
// database.js
const mongoose = require('mongoose');

// Connection settings. useNewUrlParser/useUnifiedTopology are no-ops since
// Mongoose 6, and bufferMaxEntries was removed (passing it raises an error).
const connectDB = async () => {
  try {
    const conn = await mongoose.connect(process.env.MONGODB_URI, {
      maxPoolSize: 10, // connection pool size
      serverSelectionTimeoutMS: 5000,
      socketTimeoutMS: 45000,
      bufferCommands: false // fail fast instead of queueing while disconnected
    });

    console.log(`MongoDB Connected: ${conn.connection.host}`);
  } catch (error) {
    console.error('Database connection error:', error);
    process.exit(1);
  }
};

// Create indexes
const createIndexes = async () => {
  try {
    // Indexes on the users collection
    await mongoose.connection.db.collection('users').createIndexes([
      { key: { email: 1 }, unique: true },
      { key: { username: 1 }, unique: true },
      { key: { createdAt: -1 } }
    ]);

    // Indexes on the products collection
    await mongoose.connection.db.collection('products').createIndexes([
      { key: { name: 'text', description: 'text' } },
      { key: { category: 1, price: 1 } },
      { key: { createdAt: -1 } }
    ]);

    console.log('Database indexes created');
  } catch (error) {
    console.error('Index creation error:', error);
  }
};

module.exports = { connectDB, createIndexes };
```

7.4 Performance Monitoring

8. Security and Backup

8.1 Security Configuration

Application Security Middleware

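```javascript
// security.js
const helmet = require('helmet');
const rateLimit = require('express-rate-limit');
const mongoSanitize = require('express-mongo-sanitize');
const xss = require('xss-clean');

// Security configuration
const configureSecurity = (app) => {
  // Behind Nginx, trust the first proxy so req.ip (used by the rate
  // limiters) is the client address rather than the proxy's
  app.set('trust proxy', 1);

  // Security headers
  app.use(helmet({
    contentSecurityPolicy: {
      directives: {
        defaultSrc: ["'self'"],
        styleSrc: ["'self'", "'unsafe-inline'"],
        scriptSrc: ["'self'"],
        imgSrc: ["'self'", "data:", "https:"],
      },
    },
    hsts: {
      maxAge: 31536000,
      includeSubDomains: true,
      preload: true
    }
  }));

  // Rate limiting for the API
  const limiter = rateLimit({
    windowMs: 15 * 60 * 1000, // 15 minutes
    max: 100, // at most 100 requests per IP per window
    message: {
      error: 'Too many requests, please try again later.'
    },
    standardHeaders: true,
    legacyHeaders: false,
  });
  app.use('/api/', limiter);

  // Stricter limit for login attempts
  const authLimiter = rateLimit({
    windowMs: 60 * 60 * 1000, // 1 hour
    max: 5, // at most 5 failed attempts per IP
    skipSuccessfulRequests: true, // successful logins do not count
  });
  app.use('/api/auth/login', authLimiter);

  // Strip keys containing $ or . from input to prevent NoSQL injection
  app.use(mongoSanitize());

  // Sanitize input against XSS. xss-clean is deprecated and unmaintained;
  // output escaping plus the CSP above is the more robust defense.
  app.use(xss());
};

module.exports = { configureSecurity };
```

SSL/TLS Configuration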

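```javascript
// ssl-server.js
const https = require('https');
const fs = require('fs');
const express = require('express');

const app = express();

// SSL certificate configuration
const sslOptions = {
  key: fs.readFileSync('/path/to/private-key.pem'),
  cert: fs.readFileSync('/path/to/certificate.pem'),
  ca: fs.readFileSync('/path/to/ca-certificate.pem'), // optional: intermediate chain

  // Accept TLS 1.2 and 1.3 (secureProtocol: 'TLSv1_2_method' would pin TLS 1.2 only)
  minVersion: 'TLSv1.2',
  // TLS 1.3 suites (TLS_*) are listed explicitly so TLS 1.3 stays available;
  // AES-GCM suites use SHA256/SHA384 ("*-GCM-SHA512" suites do not exist)
  ciphers: [
    'TLS_AES_256_GCM_SHA384',
    'TLS_CHACHA20_POLY1305_SHA256',
    'TLS_AES_128_GCM_SHA256',
    'ECDHE-RSA-AES256-GCM-SHA384',
    'DHE-RSA-AES256-GCM-SHA384',
    'ECDHE-RSA-AES128-GCM-SHA256',
    'ECDHE-RSA-AES256-SHA384'
  ].join(':'),
  honorCipherOrder: true
};

// Create the HTTPS server (port 443 needs root or CAP_NET_BIND_SERVICE)
const server = https.createServer(sslOptions, app);

server.listen(443, () => {
  console.log('HTTPS Server running on port 443');
});
```

8.2 Data Backup Strategy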

MongoDB Backup Script

```bash
#!/bin/bash
# mongodb-backup.sh
set -e

# Configuration
DB_NAME="myapp"
BACKUP_DIR="/backup/mongodb"
DATE=$(date +%Y%m%d_%H%M%S)
BACKUP_NAME="mongodb_backup_${DATE}"
RETENTION_DAYS=7

# Create the backup directory
mkdir -p "$BACKUP_DIR"

# Run the backup
echo "Backing up MongoDB database: $DB_NAME"
mongodump --db "$DB_NAME" --out "$BACKUP_DIR/$BACKUP_NAME"

# Compress the backup
echo "Compressing backup..."
tar -czf "$BACKUP_DIR/${BACKUP_NAME}.tar.gz" -C "$BACKUP_DIR" "$BACKUP_NAME"
rm -rf "${BACKUP_DIR:?}/$BACKUP_NAME"

# Remove old backups
echo "Removing backups older than $RETENTION_DAYS days..."
find "$BACKUP_DIR" -name "mongodb_backup_*.tar.gz" -mtime +"$RETENTION_DAYS" -delete

# Upload to cloud storage (optional)
if [ -n "$AWS_S3_BUCKET" ]; then
  echo "Uploading backup to S3..."
  aws s3 cp "$BACKUP_DIR/${BACKUP_NAME}.tar.gz" "s3://$AWS_S3_BUCKET/mongodb-backups/"
fi

echo "Backup complete: ${BACKUP_NAME}.tar.gz"
```

Automated Backup Configuration

```bash
# Add to crontab
# Daily backup at 02:00
0 2 * * * /path/to/mongodb-backup.sh >> /var/log/mongodb-backup.log 2>&1

# Full backup every Sunday at 03:00
0 3 * * 0 /path/to/full-backup.sh >> /var/log/full-backup.log 2>&1
```
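The backup script prunes archives with `find -mtime +$RETENTION_DAYS`, which relies on file modification times. If archives are copied between machines (for example restored from S3 to a new disk), those times change, so retention can instead be computed from the timestamp embedded in the `mongodb_backup_YYYYMMDD_HHMMSS.tar.gz` name. A sketch with a hypothetical `expiredBackups` function that only selects names and deletes nothing; it treats the timestamps as UTC, which is an assumption (the script's `date` call uses the server's local time zone).

```javascript
const NAME_RE = /^mongodb_backup_(\d{4})(\d{2})(\d{2})_(\d{2})(\d{2})(\d{2})\.tar\.gz$/;

// Return archive names older than retentionDays, judged by the timestamp in the name
function expiredBackups(fileNames, retentionDays, now = new Date()) {
  const cutoff = now.getTime() - retentionDays * 24 * 60 * 60 * 1000;
  return fileNames.filter((name) => {
    const m = NAME_RE.exec(name);
    if (!m) return false; // ignore files that do not follow the naming scheme
    const [, y, mo, d, h, mi, s] = m.map(Number);
    const takenAt = Date.UTC(y, mo - 1, d, h, mi, s); // months are 0-based
    return takenAt < cutoff;
  });
}

module.exports = { expiredBackups };
```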

8.3 Disaster Recovery

Restore Script

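```bash
#!/bin/bash
# mongodb-restore.sh
set -e

BACKUP_FILE=$1
DB_NAME="myapp"

if [ -z "$BACKUP_FILE" ]; then
  echo "Usage: $0 <backup_file.tar.gz>"
  exit 1
fi

echo "Starting MongoDB restore..."

# Use a fresh temporary directory so leftovers from earlier runs
# cannot confuse the directory lookup below
TEMP_DIR=$(mktemp -d /tmp/mongodb_restore.XXXXXX)

# Extract the backup archive
echo "Extracting backup archive..."
tar -xzf "$BACKUP_FILE" -C "$TEMP_DIR"

# Name of the extracted backup directory
BACKUP_DIR=$(ls "$TEMP_DIR")

# Stop the application
echo "Stopping application..."
pm2 stop myapp

# Restore the database (--drop replaces existing collections)
echo "Restoring database..."
mongorestore --db "$DB_NAME" --drop "$TEMP_DIR/$BACKUP_DIR/$DB_NAME"

# Start the application
echo "Starting application..."
pm2 start myapp

# Clean up temporary files
rm -rf "$TEMP_DIR"

echo "Database restore complete!"
```

9. Troubleshooting and Debugging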

9.1 Diagnosing Common Problems

Memory Leak Detection

```javascript
// memory-monitor.js
const v8 = require('v8');

// Convert bytes to a rounded MB string
const toMB = (bytes) => `${Math.round(bytes / 1024 / 1024)}MB`;

// Write at most one automatic heap snapshot per process
let snapshotWritten = false;

// Log memory usage
const monitorMemory = () => {
  const usage = process.memoryUsage();
  const heapStats = v8.getHeapStatistics();

  console.log('Memory Usage:', {
    rss: toMB(usage.rss),
    heapTotal: toMB(usage.heapTotal),
    heapUsed: toMB(usage.heapUsed),
    external: toMB(usage.external),
    heapLimit: toMB(heapStats.heap_size_limit)
  });

  // Above the threshold, write a heap snapshot once. writeHeapSnapshot() is
  // synchronous, blocks the event loop and produces large files.
  if (!snapshotWritten && usage.heapUsed > 500 * 1024 * 1024) { // 500MB
    const snapshot = v8.writeHeapSnapshot();
    snapshotWritten = true;
    console.log('Heap snapshot written to:', snapshot);
  }
};

// Check memory once a minute; unref() keeps this timer from holding the process open
setInterval(monitorMemory, 60000).unref();

module.exports = { monitorMemory };
```

Performance Profiling

```javascript
// profiler.js
const inspector = require('inspector');
const fs = require('fs');
const path = require('path');

class Profiler {
  constructor() {
    this.session = null;
  }

  start() {
    this.session = new inspector.Session();
    this.session.connect();

    // Enable and start the CPU profiler
    this.session.post('Profiler.enable');
    this.session.post('Profiler.start');

    console.log('CPU profiler started');
  }

  async stop() {
    if (!this.session) return null;

    return new Promise((resolve, reject) => {
      this.session.post('Profiler.stop', (err, result) => {
        // Check err first: result is undefined when the call fails
        if (err) {
          reject(err);
          return;
        }

        const dir = path.join(__dirname, '../profiles');
        fs.mkdirSync(dir, { recursive: true });
        const filepath = path.join(dir, `cpu-profile-${Date.now()}.cpuprofile`);

        // The .cpuprofile file can be opened in Chrome DevTools
        fs.writeFileSync(filepath, JSON.stringify(result.profile));
        console.log('CPU profile saved to:', filepath);

        this.session.disconnect();
        this.session = null;
        resolve(filepath);
      });
    });
  }
}

module.exports = Profiler;
```

9.2 Log Analysis

Error Tracking
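The error handler that follows hides every message in production, including useful client-facing ones such as validation errors. A common refinement, sketched with a hypothetical `AppError` class and `toErrorResponse` function, exposes messages only for errors explicitly marked as safe (4xx) and falls back to the standard status text from Node's `http.STATUS_CODES` otherwise.

```javascript
const { STATUS_CODES } = require('http');

// Hypothetical error type for expected, client-facing failures
class AppError extends Error {
  constructor(message, statusCode = 400) {
    super(message);
    this.name = 'AppError';
    this.statusCode = statusCode;
    this.expose = statusCode < 500; // safe to show the message to clients
  }
}

// Map any thrown value to { statusCode, body } for the HTTP response
function toErrorResponse(err, nodeEnv = process.env.NODE_ENV) {
  const code = err?.statusCode;
  const statusCode = Number.isInteger(code) && code >= 400 && code < 600 ? code : 500;
  // Show the real message for exposed errors, or anywhere outside production
  const showMessage = err?.expose === true || nodeEnv !== 'production';
  const message = showMessage && err?.message ? err.message : STATUS_CODES[statusCode] || 'Error';
  return { statusCode, body: { error: { message, status: statusCode } } };
}

module.exports = { AppError, toErrorResponse };
```

Inside the Express error handler this becomes `const { statusCode, body } = toErrorResponse(err); res.status(statusCode).json(body);`.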

```javascript
// error-tracker.js
const Sentry = require('@sentry/node');

// Initialize Sentry
Sentry.init({
  dsn: process.env.SENTRY_DSN,
  environment: process.env.NODE_ENV,
  // 1.0 traces every request; lower it (e.g. 0.1) under production load
  tracesSampleRate: 1.0,
});

// Error-handling middleware (Express recognizes it by its four arguments)
const errorHandler = (err, req, res, next) => {
  // Report to Sentry. Raw headers are not attached because they can contain
  // cookies and Authorization tokens.
  Sentry.captureException(err, {
    user: {
      id: req.user?.id,
      email: req.user?.email
    },
    extra: {
      method: req.method,
      url: req.url
    }
  });

  // Log locally as well
  console.error('Error:', {
    message: err.message,
    stack: err.stack,
    url: req.url,
    method: req.method,
    userId: req.user?.id,
    timestamp: new Date().toISOString()
  });

  // Send the error response; hide internal details in production
  const statusCode = err.statusCode || 500;
  res.status(statusCode).json({
    error: {
      message: process.env.NODE_ENV === 'production'
        ? 'Internal Server Error'
        : err.message,
      status: statusCode
    }
  });
};

module.exports = { errorHandler };
```

9.3 Debugging Tools

Remote Debugging Configuration

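```bash
# Start the app with the inspector enabled. Bind to 127.0.0.1: anyone who can
# reach the inspector port can run arbitrary code in the process.
node --inspect=127.0.0.1:9229 src/index.js

# Or with PM2
pm2 start src/index.js --node-args="--inspect=127.0.0.1:9229"

# From your workstation, forward the port over SSH, then open chrome://inspect
ssh -L 9229:127.0.0.1:9229 nodeapp@your-server.com
```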

Debugging Scripts

```javascript
// debug-utils.js
const util = require('util');
const fs = require('fs');

// Print an object at full depth inside a labelled banner
const deepLog = (obj, label = 'Debug') => {
  console.log(`\n=== ${label} ===`);
  console.log(util.inspect(obj, {
    depth: null,
    colors: true,
    showHidden: false
  }));
  console.log('='.repeat(label.length + 8));
};

// High-resolution timer
class Timer {
  constructor(label) {
    this.label = label;
    this.start = process.hrtime.bigint();
  }

  end() {
    const end = process.hrtime.bigint();
    const duration = Number(end - this.start) / 1000000; // nanoseconds to milliseconds
    console.log(`${this.label}: ${duration.toFixed(2)}ms`);
    return duration;
  }
}

// Save a JSON summary of process.memoryUsage() (not a V8 heap snapshot)
const takeMemorySnapshot = () => {
  const usage = process.memoryUsage();
  const snapshot = {
    timestamp: new Date().toISOString(),
    pid: process.pid,
    memory: {
      rss: usage.rss,
      heapTotal: usage.heapTotal,
      heapUsed: usage.heapUsed,
      external: usage.external
    }
  };

  const filename = `memory-snapshot-${Date.now()}.json`;
  fs.writeFileSync(filename, JSON.stringify(snapshot, null, 2));
  console.log('Memory snapshot saved to:', filename);

  return snapshot;
};

module.exports = { deepLog, Timer, takeMemorySnapshot };
```

10. Best Practices and Summary

10.1 Deployment Best Practices

Deployment Checklist

Environment Management Strategy

```javascript
// config/environments.js
const environments = {
  development: {
    port: 3000,
    database: {
      uri: 'mongodb://localhost:27017/myapp_dev',
      // useNewUrlParser/useUnifiedTopology are no-ops since Mongoose 6
      options: {}
    },
    redis: {
      url: 'redis://localhost:6379'
    },
    logging: {
      level: 'debug',
      console: true
    },
    security: {
      cors: {
        origin: '*'
      }
    }
  },

  staging: {
    port: process.env.PORT || 3000,
    database: {
      uri: process.env.MONGODB_URI,
      options: {
        maxPoolSize: 5
      }
    },
    redis: {
      url: process.env.REDIS_URL
    },
    logging: {
      level: 'info',
      console: false,
      file: true
    },
    security: {
      cors: {
        origin: process.env.ALLOWED_ORIGINS?.split(',') || []
      }
    }
  },

  production: {
    port: process.env.PORT || 3000,
    database: {
      uri: process.env.MONGODB_URI,
      options: {
        maxPoolSize: 10,
        serverSelectionTimeoutMS: 5000,
        socketTimeoutMS: 45000
      }
    },
    redis: {
      url: process.env.REDIS_URL,
      // These option names come from ioredis; node-redis v4 (used in cache.js)
      // configures reconnects through socket.reconnectStrategy instead
      options: {
        retryDelayOnFailover: 100,
        maxRetriesPerRequest: 3
      }
    },
    logging: {
      level: 'warn',
      console: false,
      file: true,
      sentry: true
    },
    security: {
      cors: {
        origin: process.env.ALLOWED_ORIGINS?.split(',') || []
      },
      rateLimit: {
        windowMs: 15 * 60 * 1000,
        max: 100
      }
    }
  }
};

// Fail loudly on an unknown NODE_ENV (e.g. "test") instead of exporting undefined
const envName = process.env.NODE_ENV || 'development';
if (!environments[envName]) {
  throw new Error(`No configuration for NODE_ENV="${envName}"`);
}

module.exports = environments[envName];
```

10.2 Operations Automation

Automated Deployment Script
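The script's health_check step is a bounded polling loop: up to 30 attempts, 10 seconds apart, succeeding on the first passing check and otherwise handing control back so the caller can roll back. If you would rather drive that step from a Node.js tool than from ssh plus curl, a minimal sketch of the same loop follows. `waitForHealthy` and `httpCheck` are hypothetical helpers; the check is injected so it can wrap Node 18's global `fetch` or any other probe.

```javascript
// wait-for-healthy.js (hypothetical helper): bounded health-check polling,
// modeled on the health_check() loop in auto-deploy.sh
const { setTimeout: sleep } = require('timers/promises');

async function waitForHealthy(check, { maxAttempts = 30, intervalMs = 10000, onRetry = () => {} } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      if (await check()) return attempt; // healthy: report which attempt succeeded
    } catch {
      // Connection errors (e.g. while PM2 is reloading the app) count as a failed attempt
    }
    onRetry(attempt, maxAttempts);
    if (attempt < maxAttempts) await sleep(intervalMs);
  }
  return 0; // never became healthy; the caller decides whether to roll back
}

// Example probe using Node 18+'s global fetch: healthy when the status is 2xx
const httpCheck = (url) => async () => {
  const res = await fetch(url);
  return res.ok;
};

module.exports = { waitForHealthy, httpCheck };
```

Usage: `const attempt = await waitForHealthy(httpCheck('http://localhost:3000/health'));` and trigger the rollback when it returns 0. Returning a value instead of throwing keeps the "check, then roll back" decision in the caller, which is the same separation the shell script needs between health_check and main.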

#!/bin/bash
# auto-deploy.sh
set -e

# Configuration
APP_NAME="myapp"
DEPLOY_USER="deploy"
DEPLOY_HOST="your-server.com"
DEPLOY_PATH="/opt/apps/$APP_NAME"
BACKUP_PATH="/opt/backups/$APP_NAME"
HEALTH_CHECK_URL="http://localhost:3000/health"

# Colored output
RED='\033[0;31m'
GREEN='\033[0;32m'
YELLOW='\033[1;33m'
NC='\033[0m' # No Color

log() {
  echo -e "${GREEN}[$(date +'%Y-%m-%d %H:%M:%S')] $1${NC}"
}

warn() {
  echo -e "${YELLOW}[$(date +'%Y-%m-%d %H:%M:%S')] WARNING: $1${NC}"
}

error() {
  echo -e "${RED}[$(date +'%Y-%m-%d %H:%M:%S')] ERROR: $1${NC}"
  exit 1
}

# Pre-deployment checks
pre_deploy_check() {
  log "Running pre-deployment checks..."

  # Check Git status
  if [[ -n $(git status --porcelain) ]]; then
    error "Working tree is not clean; commit or stash your changes"
  fi

  # Run tests
  log "Running tests..."
  npm test || error "Tests failed"

  # Check that the build succeeds
  log "Checking build..."
  npm run build || error "Build failed"

  log "Pre-deployment checks passed"
}

# Back up the current version
backup_current() {
  log "Backing up current version..."
  ssh $DEPLOY_USER@$DEPLOY_HOST "
    if [ -d $DEPLOY_PATH ]; then
      sudo mkdir -p $BACKUP_PATH
      sudo cp -r $DEPLOY_PATH $BACKUP_PATH/backup-$(date +%Y%m%d_%H%M%S)
      # Keep only the 5 most recent backups (cd first: ls -t prints bare names)
      cd $BACKUP_PATH && sudo ls -t | tail -n +6 | xargs -r sudo rm -rf
    fi
  "
  log "Backup complete"
}

# Deploy the new version
deploy() {
  log "Starting deployment..."

  # Upload code
  rsync -avz --delete \
    --exclude 'node_modules' \
    --exclude '.git' \
    --exclude 'logs' \
    ./ $DEPLOY_USER@$DEPLOY_HOST:$DEPLOY_PATH/

  # Remote deployment commands: install all dependencies (the build usually
  # needs devDependencies), build, then prune devDependencies
  ssh $DEPLOY_USER@$DEPLOY_HOST "
    set -e
    cd $DEPLOY_PATH
    npm ci
    npm run build
    npm prune --omit=dev
    sudo systemctl reload nginx
    pm2 reload ecosystem.config.js --env production
  "

  log "Deployment complete"
}

# Health check: returns non-zero instead of exiting, so main() can roll back
health_check() {
  log "Running health check..."
  local max_attempts=30
  local attempt=1

  while [ $attempt -le $max_attempts ]; do
    if ssh $DEPLOY_USER@$DEPLOY_HOST "curl -f $HEALTH_CHECK_URL > /dev/null 2>&1"; then
      log "Health check passed"
      return 0
    fi

    warn "Health check failed (attempt $attempt/$max_attempts)"
    sleep 10
    attempt=$((attempt + 1))
  done

  warn "Health check still failing after $max_attempts attempts"
  return 1
}

# Roll back to the most recent backup
rollback() {
  warn "Starting rollback..."

  ssh $DEPLOY_USER@$DEPLOY_HOST "
    latest_backup=\$(ls -t $BACKUP_PATH | head -n 1)
    if [ -n \"\$latest_backup\" ]; then
      sudo rm -rf $DEPLOY_PATH
      sudo cp -r $BACKUP_PATH/\$latest_backup $DEPLOY_PATH
      cd $DEPLOY_PATH && pm2 reload ecosystem.config.js --env production
    else
      echo 'No backup found for rollback'
      exit 1
    fi
  "

  warn "Rollback complete"
}

# Main flow
main() {
  log "Starting automated deployment..."

  pre_deploy_check
  backup_current
  deploy

  if health_check; then
    log "Deployment completed successfully!"
  else
    warn "Deployment failed, rolling back..."
    rollback
    health_check || error "Health check still failing after rollback"
    error "Deployment failed; rolled back to the previous version"
  fi
}

# Report any unexpected error and exit (this trap does not roll back)
trap 'error "An error occurred during deployment"' ERR

# Run the main flow
main "$@"

10.3 Monitoring and Alerting Configuration

Prometheus Alerting Rules
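The HighResponseTime rule in the alerts.yml that follows relies on histogram_quantile(), which estimates a quantile from cumulative `_bucket` counters by linear interpolation inside the bucket that contains the target rank. The JavaScript sketch below reproduces that estimate for a single series so the 1-second threshold can be sanity-checked by hand. It is a simplified illustration, assuming 0 < q ≤ 1 and buckets sorted by upper bound with a final +Inf bucket; it is not Prometheus' own code and skips its other edge cases.

```javascript
// Simplified re-implementation of PromQL histogram_quantile() for one series.
// buckets: cumulative counts sorted by upper bound `le`; the last bucket must have le = Infinity.
function histogramQuantile(q, buckets) {
  if (!(q > 0 && q <= 1)) return NaN; // simplified: only 0 < q <= 1 is handled here
  const n = buckets.length;
  if (n < 2 || buckets[n - 1].le !== Infinity) return NaN;
  const total = buckets[n - 1].count;
  if (total === 0) return NaN;

  const rank = q * total; // observations at or below the requested quantile
  const i = buckets.findIndex((b) => b.count >= rank);

  // Rank falls into the +Inf bucket: return the highest finite upper bound
  if (i === n - 1) return buckets[n - 2].le;
  // Lowest bucket with a non-positive upper bound: no interpolation possible
  if (i === 0 && buckets[0].le <= 0) return buckets[0].le;

  // Linear interpolation inside bucket i (the first bucket's lower bound is 0)
  const lower = i === 0 ? 0 : buckets[i - 1].le;
  const below = i === 0 ? 0 : buckets[i - 1].count;
  const inBucket = buckets[i].count - below;
  return lower + (buckets[i].le - lower) * ((rank - below) / inBucket);
}

module.exports = { histogramQuantile };
```

For example, with 100 requests of which 50 finished under 0.1 s, 90 under 0.5 s and 98 under 1 s, the 95th percentile lands in the 0.5 to 1 s bucket and is estimated at 0.8125 s, so HighResponseTime would not fire. The estimate can only be as precise as the bucket boundaries, which is why the bucket layout should bracket the alert threshold.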

# alerts.yml
groups:
  - name: myapp.rules
    rules:
      - alert: HighErrorRate
        expr: rate(myapp_http_requests_total{status_code=~"5.."}[5m]) > 0.1
        for: 5m
        labels:
          severity: critical
        annotations:
          summary: "High error rate detected"
          description: "Error rate is {{ $value }} errors per second"

      - alert: HighResponseTime
        expr: histogram_quantile(0.95, rate(myapp_http_request_duration_seconds_bucket[5m])) > 1
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "High response time detected"
          description: "95th percentile response time is {{ $value }} seconds"

      - alert: HighMemoryUsage
        expr: (myapp_process_resident_memory_bytes / 1024 / 1024) > 512
        for: 10m
        labels:
          severity: warning
        annotations:
          summary: "High memory usage"
          description: "Memory usage is {{ $value }}MB"

      - alert: ServiceDown
        expr: up{job="myapp"} == 0
        for: 1m
        labels:
          severity: critical
        annotations:
          summary: "Service is down"
          description: "MyApp service is not responding"

Alert Notification Configuration
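The alertmanager.yml that follows renders each notification by ranging over `.Alerts` and printing every alert's summary and description under the group's alertname. If alerts are instead delivered to a Node.js service through Alertmanager's webhook_configs receiver (not part of the configuration here), the JSON payload carries the same data in fields such as `status`, `groupLabels` and `alerts[].annotations`. A minimal sketch that renders such a payload with the same layout; `formatAlertMessage` is a hypothetical helper, and the HTTP endpoint receiving the POST is left out.

```javascript
// Hypothetical helper: render an Alertmanager webhook payload as plain text,
// using the same layout as the email template in alertmanager.yml.
function formatAlertMessage(payload) {
  const name = (payload.groupLabels && payload.groupLabels.alertname) || 'unknown';
  const header = `[${String(payload.status || 'unknown').toUpperCase()}] Alert: ${name}`;

  // One "Alert / Description" pair per alert in the group
  const lines = (payload.alerts || []).map((alert) => {
    const a = alert.annotations || {};
    return `Alert: ${a.summary || '(no summary)'}\nDescription: ${a.description || '(no description)'}`;
  });

  return [header, ...lines].join('\n');
}

module.exports = { formatAlertMessage };
```

In an Express app, for instance, a route such as `app.post('/alerts', express.json(), (req, res) => { console.log(formatAlertMessage(req.body)); res.sendStatus(200); })` could forward the text to a chat or paging system; answering quickly with a 2xx status matters because Alertmanager treats other responses as failed deliveries.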

# alertmanager.yml
global:
  smtp_smarthost: 'smtp.gmail.com:587'
  smtp_from: 'alerts@yourdomain.com'
  # Gmail requires SMTP authentication (placeholder credentials)
  smtp_auth_username: 'alerts@yourdomain.com'
  smtp_auth_password: 'YOUR_SMTP_PASSWORD'

route:
  group_by: ['alertname']
  group_wait: 10s
  group_interval: 10s
  repeat_interval: 1h
  receiver: 'web.hook'

receivers:
  - name: 'web.hook'
    email_configs:
      - to: 'admin@yourdomain.com'
        # email_configs has no subject/body fields: the subject is set through
        # headers and the plain-text body through text
        headers:
          Subject: 'Alert: {{ .GroupLabels.alertname }}'
        text: |
          {{ range .Alerts }}
          Alert: {{ .Annotations.summary }}
          Description: {{ .Annotations.description }}
          {{ end }}
    slack_configs:
      - api_url: 'YOUR_SLACK_WEBHOOK_URL'
        channel: '#alerts'
        title: 'Alert: {{ .GroupLabels.alertname }}'
        text: |
          {{ range .Alerts }}
          {{ .Annotations.summary }}
          {{ .Annotations.description }}
          {{ end }}

10.4 Summary

Deploying and operating a Node.js application is a complex systems-engineering effort that spans several areas:

Key Takeaways

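- Environment consistency: use containerization to keep development, testing, and production environments consistent

- Automated pipelines: build a complete CI/CD pipeline to reduce errors from manual steps

- Monitoring: set up comprehensive monitoring and alerting so problems are detected and resolved promptly

- Security: apply multiple layers of protection to safeguard the application and its data

- Performance: improve performance through load balancing, caching, and database optimization

- Failure recovery: put reliable backup and restore procedures in place to ensure business continuity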

Technology Selection Recommendations
