Deploying RAGFlow on Huawei Ascend Atlas 300I DUO (arm64 architecture)

Deploying RAGFlow on Huawei Ascend Atlas 300I DUO with openEuler 22.03

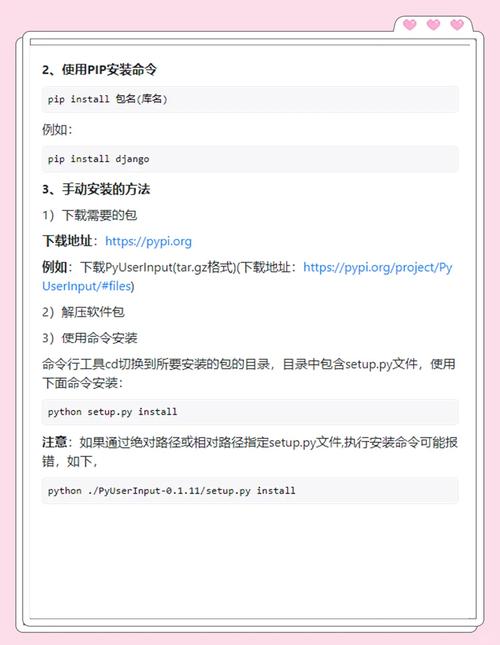

Environment

- Operating system: openEuler 22.03 LTS

- Atlas 300I DUO

- CPU ≥ 4 cores

- Memory ≥ 16 GB

- Disk ≥ 50 GB

- Docker ≥ 24.0.0 and Docker Compose ≥ v2.26.1
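The requirements above can be checked on the host before starting. The following is a minimal sketch and not part of the original guide: the function names (`version_ge`, `check_min`, `preflight`) are made up for illustration, it assumes GNU `sort -V` and a Linux `/proc/meminfo`, and it only measures free disk space on the current filesystem.

```shell
#!/bin/sh
# Illustrative preflight check; all function names are hypothetical helpers.

# version_ge A B: succeed when version A >= version B (relies on GNU `sort -V`).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_min LABEL ACTUAL REQUIRED: compare two integers and print a report line.
check_min() {
    if [ "$2" -ge "$3" ]; then
        echo "OK    $1: $2 (need >= $3)"
    else
        echo "FAIL  $1: $2 (need >= $3)"
        return 1
    fi
}

# preflight: run every check and return non-zero if any of them failed.
preflight() {
    status=0
    [ "$(uname -m)" = "aarch64" ] || { echo "FAIL  architecture: $(uname -m)"; status=1; }
    check_min "CPU cores" "$(nproc)" 4 || status=1
    # MemTotal is in kB; round up because the kernel reserves part of the RAM.
    mem_gb=$(awk '/^MemTotal:/ { printf "%d", ($2 + 1048575) / 1048576 }' /proc/meminfo)
    check_min "memory (GB)" "$mem_gb" 16 || status=1
    # Column 4 of `df -Pk` is the free space in 1 KiB blocks.
    disk_gb=$(df -Pk . | awk 'NR == 2 { printf "%d", $4 / 1048576 }')
    check_min "free disk (GB)" "$disk_gb" 50 || status=1
    docker_v=$(docker --version 2>/dev/null | sed -n 's/^Docker version \([0-9.]*\).*/\1/p')
    version_ge "${docker_v:-0}" 24.0.0 || { echo "FAIL  Docker ${docker_v:-not found} (need >= 24.0.0)"; status=1; }
    compose_v=$(docker compose version --short 2>/dev/null | sed 's/^v//')
    version_ge "${compose_v:-0}" 2.26.1 || { echo "FAIL  Docker Compose ${compose_v:-not found} (need >= 2.26.1)"; status=1; }
    return "$status"
}
```

Running `preflight` prints one line per check and returns non-zero if any check fails.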

Official resource requirements and steps

Prerequisites

- Configure the China mirror endpoint (key step)

# Set the environment variable before running the script
export HF_ENDPOINT=https://hf-mirror.com
# Make the setting permanent
echo 'export HF_ENDPOINT=https://hf-mirror.com' >> ~/.bashrc
# ModelScope: models are downloaded to ~/.cache/modelscope/hub by default. To change the cache directory, set the MODELSCOPE_CACHE environment variable; models are then downloaded to the directory it points to.
echo 'export MODELSCOPE_CACHE=/home/nvmedisk0/modelscopedownloadcache' >> ~/.bashrc
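Appending to `~/.bashrc` with `echo ... >>` adds a new line on every run, so repeating this step leaves duplicate exports behind. A small sketch of an idempotent alternative follows; `append_once` is a hypothetical helper name, not something provided by the tools used here.

```shell
# append_once LINE FILE: append LINE to FILE only if no identical line exists.
append_once() {
    touch "$2"
    # -x matches whole lines, -F treats LINE as a fixed string, -q is silent.
    grep -qxF -- "$1" "$2" || printf '%s\n' "$1" >> "$2"
}
```

For example, `append_once 'export HF_ENDPOINT=https://hf-mirror.com' ~/.bashrc` can be run any number of times and still leaves a single line in the file.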

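- Install the uv tool (the method recommended by Astral)

# Install with curl (recommended)
curl -LsSf https://astral.sh/uv/install.sh | sh
# Or install with pip (make sure pip is up to date)
pip install --upgrade uv
uv --version # should print something like: uv 0.2.2
# openEuler uses the dnf package manager; install the CA certificates
sudo dnf install ca-certificates -y
# Update the certificate database
sudo update-ca-trust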

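- Install Docker

Pick the versions you need. I installed Docker version 26.1.4, build 5650f9b, and Docker Compose version v2.35.0.

# Install Docker
curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun
systemctl enable --now docker
# Install docker-compose
# Replace v2.20.3 with the version you need (the requirements above call for v2.26.1 or later)
# Compose v2 release assets use a lowercase OS name, so "linux" is written out instead of `uname -s`
curl -L https://github.com/docker/compose/releases/download/v2.20.3/docker-compose-linux-`uname -m` -o /usr/local/bin/docker-compose
chmod +x /usr/local/bin/docker-compose
# Verify the installation
docker -v
docker-compose -v

- Docker registry mirror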

Building RAGFlow

Original steps from the official site

git clone https://github.com/infiniflow/ragflow.git
cd ragflow/
# Use the China mirrors here
uv run download_deps.py --china-mirrors
# Build the dependency image (the Dockerfile below mounts files from infiniflow/ragflow_deps:latest)
docker build -f Dockerfile.deps -t infiniflow/ragflow_deps .
# If this step fails with the error /chrome-linux64-121-0-6167-85" not found: not found, the directory is missing; run the commands below to fix it.
# If some other volumes directory does not exist, simply create it.
## List the files in the current directory (confirm that all dependency files were downloaded)
ls -lh chromedriver* chrome* cl100k* libssl* tika*
# You should see the following files (note the architecture suffixes):
# - chromedriver-linux64-121-0-6167-85.zip
# - chrome-linux64-121-0-6167-85.zip
# - cl100k_base.tiktoken
# - libssl1.1_1.1.1f-1ubuntu2_amd64.deb
# - libssl1.1_1.1.1f-1ubuntu2_arm64.deb
# - tika-server-standard-3.0.0.jar
# - tika-server-standard-3.0.0.jar.md5
# If the file names differ because the China mirrors were used (example fix):
cp chrome-linux64.zip chrome-linux64-121-0-6167-85.zip
cp chromedriver-linux64.zip chromedriver-linux64-121-0-6167-85.zip
# Create the chromedriver extraction directory (unzip creates it automatically)
unzip chromedriver-linux64-121-0-6167-85.zip -d chromedriver-linux64-121-0-6167-85
# Create the chrome extraction directory (note that the directory name starts with chrome, not hrome)
unzip chrome-linux64-121-0-6167-85.zip -d chrome-linux64-121-0-6167-85
# Important: modify the Dockerfile before running this step; see the full configuration below
docker build --build-arg LIGHTEN=1 -f Dockerfile -t infiniflow/ragflow:nightly-slim .
# A mount error may occur here:
# ERROR: failed to solve: failed to solve with frontend dockerfile.v0: failed to create LLB definition: Dockerfile parse error line 14: Unknown flag: mount
# Fix it by editing the Dockerfile as follows.
# 1. Add this as the first line: # syntax=swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/docker/dockerfile:1.4-linuxarm64
# Alternative command that ignores the build cache
docker build --no-cache --build-arg LIGHTEN=1 -f Dockerfile -t infiniflow/ragflow:nightly-slim .
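Before starting a long `docker build`, it can help to confirm that every expected dependency file is present. The sketch below uses the file list from the steps above; `check_deps` is a hypothetical helper name, and the list may need adjusting if the upstream download script changes.

```shell
# check_deps DIR: print each expected dependency file missing from DIR and
# return non-zero if at least one is missing.
check_deps() {
    missing=0
    for f in \
        chromedriver-linux64-121-0-6167-85.zip \
        chrome-linux64-121-0-6167-85.zip \
        cl100k_base.tiktoken \
        libssl1.1_1.1.1f-1ubuntu2_amd64.deb \
        libssl1.1_1.1.1f-1ubuntu2_arm64.deb \
        tika-server-standard-3.0.0.jar \
        tika-server-standard-3.0.0.jar.md5
    do
        if [ ! -e "$1/$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    return "$missing"
}
```

Running `check_deps .` from the ragflow directory lists whatever still has to be downloaded or renamed.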

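Dockerfile: fixing the mount error and switching to China mirrors

Lines 123, 127, and 131, which copy files, were modified.

# The key step should contain instructions similar to the following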

COPY chromedriver-linux64-121-0-6167-85 /

COPY chrome-linux64-121-0-6167-85 /

RUN --mount=type=bind,source=/chrome-linux64-121-0-6167-85.zip,target=/chrome-linux64.zip \

unzip /chrome-linux64.zip && \

mv chrome-linux64 /opt/chrome && \

ln -s /opt/chrome/chrome /usr/local/bin/

RUN --mount=type=bind,source=/chromedriver-linux64-121-0-6167-85.zip,target=/chromedriver-linux64.zip \

unzip -j /chromedriver-linux64.zip chromedriver-linux64/chromedriver && \

mv chromedriver /usr/local/bin/ && \

rm -f /usr/bin/google-chrome
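The `Unknown flag: mount` fix amounts to making a `# syntax=` directive the first line of the Dockerfile. A minimal sketch of automating that edit is shown here; `ensure_syntax` is a hypothetical helper name, and it leaves the file alone when it already starts with a syntax directive.

```shell
# ensure_syntax FILE IMAGE: make "# syntax=IMAGE" the first line of FILE,
# unless FILE already begins with a syntax directive.
ensure_syntax() {
    first=$(head -n 1 "$1")
    case "$first" in
        "# syntax="*|"#syntax="*) return 0 ;;
    esac
    tmp=$(mktemp)
    # Write the directive plus the old content, then copy back in place so
    # the original file keeps its permissions.
    { printf '# syntax=%s\n' "$2"; cat "$1"; } > "$tmp" && cat "$tmp" > "$1"
    rm -f "$tmp"
}
```

With the mirror used in this guide, the call would be `ensure_syntax Dockerfile swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/docker/dockerfile:1.4-linuxarm64`.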

Full Dockerfile for reference

# syntax=swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/docker/dockerfile:1.4-linuxarm64

# base stage

FROM swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/library/ubuntu:22.04-linuxarm64 AS base

USER root

SHELL ["/bin/bash", "-c"]

ARG NEED_MIRROR=1

ARG LIGHTEN=0

ENV LIGHTEN=${LIGHTEN}

WORKDIR /ragflow

# Copy models downloaded via download_deps.py

RUN mkdir -p /ragflow/rag/res/deepdoc /root/.ragflow

RUN --mount=type=bind,from=infiniflow/ragflow_deps:latest,source=/huggingface.co,target=/huggingface.co \

cp /huggingface.co/InfiniFlow/huqie/huqie.txt.trie /ragflow/rag/res/ && \

tar --exclude='.*' -cf - \

/huggingface.co/InfiniFlow/text_concat_xgb_v1.0 \

/huggingface.co/InfiniFlow/deepdoc \

| tar -xf - --strip-components=3 -C /ragflow/rag/res/deepdoc

RUN --mount=type=bind,from=infiniflow/ragflow_deps:latest,source=/huggingface.co,target=/huggingface.co \

if [ "$LIGHTEN" != "1" ]; then \

(tar -cf - \

/huggingface.co/BAAI/bge-large-zh-v1.5 \

/huggingface.co/maidalun1020/bce-embedding-base_v1 \

| tar -xf - --strip-components=2 -C /root/.ragflow) \

fi

# https://github.com/chrismattmann/tika-python

# This is the only way to run python-tika without internet access. Without this set, the default is to check the tika version and pull latest every time from Apache.

RUN --mount=type=bind,from=infiniflow/ragflow_deps:latest,source=/,target=/deps \

cp -r /deps/nltk_data /root/ && \

cp /deps/tika-server-standard-3.0.0.jar /deps/tika-server-standard-3.0.0.jar.md5 /ragflow/ && \

cp /deps/cl100k_base.tiktoken /ragflow/9b5ad71b2ce5302211f9c61530b329a4922fc6a4

ENV TIKA_SERVER_JAR="file:///ragflow/tika-server-standard-3.0.0.jar"

ENV DEBIAN_FRONTEND=noninteractive

# Setup apt

# Python package and implicit dependencies:

# opencv-python: libglib2.0-0 libglx-mesa0 libgl1

# aspose-slides: pkg-config libicu-dev libgdiplus libssl1.1_1.1.1f-1ubuntu2_amd64.deb

# python-pptx: default-jdk tika-server-standard-3.0.0.jar

# selenium: libatk-bridge2.0-0 chrome-linux64-121-0-6167-85

# Building C extensions: libpython3-dev libgtk-4-1 libnss3 xdg-utils libgbm-dev

RUN --mount=type=cache,id=ragflow_apt,target=/var/cache/apt,sharing=locked \

if [ "$NEED_MIRROR" == "1" ]; then \

sed -i 's|http://ports.ubuntu.com|http://mirrors.tuna.tsinghua.edu.cn|g' /etc/apt/sources.list; \

sed -i 's|http://archive.ubuntu.com|http://mirrors.tuna.tsinghua.edu.cn|g' /etc/apt/sources.list; \

fi; \

rm -f /etc/apt/apt.conf.d/docker-clean && \

echo 'Binary::apt::APT::Keep-Downloaded-Packages "true";' > /etc/apt/apt.conf.d/keep-cache && \

chmod 1777 /tmp && \

apt update && \

apt --no-install-recommends install -y ca-certificates && \

apt update && \

apt install -y libglib2.0-0 libglx-mesa0 libgl1 && \

apt install -y pkg-config libicu-dev libgdiplus && \

apt install -y default-jdk && \

apt install -y libatk-bridge2.0-0 && \

apt install -y libpython3-dev libgtk-4-1 libnss3 xdg-utils libgbm-dev && \

apt install -y libjemalloc-dev && \

apt install -y python3-pip pipx nginx unzip curl wget git vim less

RUN if [ "$NEED_MIRROR" == "1" ]; then \

pip3 config set global.index-url https://mirrors.aliyun.com/pypi/simple && \

pip3 config set global.trusted-host mirrors.aliyun.com; \

mkdir -p /etc/uv && \

echo "[[index]]" > /etc/uv/uv.toml && \

echo 'url = "https://mirrors.aliyun.com/pypi/simple"' >> /etc/uv/uv.toml && \

echo "default = true" >> /etc/uv/uv.toml; \

fi; \

pipx install uv

ENV PYTHONDONTWRITEBYTECODE=1 DOTNET_SYSTEM_GLOBALIZATION_INVARIANT=1

ENV PATH=/root/.local/bin:$PATH

# nodejs 12.22 on Ubuntu 22.04 is too old

RUN --mount=type=cache,id=ragflow_apt,target=/var/cache/apt,sharing=locked \

curl -fsSL https://deb.nodesource.com/setup_20.x | bash - && \

apt purge -y nodejs npm cargo && \

apt autoremove -y && \

apt update && \

apt install -y nodejs

# A modern version of cargo is needed for the latest version of the Rust compiler.

RUN apt update && apt install -y curl build-essential \

&& if [ "$NEED_MIRROR" == "1" ]; then \

# Use TUNA mirrors for rustup/rust dist files

export RUSTUP_DIST_SERVER="https://mirrors.tuna.tsinghua.edu.cn/rustup"; \

export RUSTUP_UPDATE_ROOT="https://mirrors.tuna.tsinghua.edu.cn/rustup/rustup"; \

echo "Using TUNA mirrors for Rustup."; \

fi; \

# Force curl to use HTTP/1.1

curl --proto '=https' --tlsv1.2 --http1.1 -sSf https://sh.rustup.rs | bash -s -- -y --profile minimal \

&& echo 'export PATH="/root/.cargo/bin:${PATH}"' >> /root/.bashrc

ENV PATH="/root/.cargo/bin:${PATH}"

RUN cargo --version && rustc --version

# Add msssql ODBC driver

# macOS ARM64 environment, install msodbcsql18.

# general x86_64 environment, install msodbcsql17.

RUN --mount=type=cache,id=ragflow_apt,target=/var/cache/apt,sharing=locked \

curl https://packages.microsoft.com/keys/microsoft.asc | apt-key add - && \

curl https://packages.microsoft.com/config/ubuntu/22.04/prod.list > /etc/apt/sources.list.d/mssql-release.list && \

apt update && \

arch="$(uname -m)"; \

if [ "$arch" = "arm64" ] || [ "$arch" = "aarch64" ]; then \

# ARM64 (macOS/Apple Silicon or Linux aarch64)

ACCEPT_EULA=Y apt install -y unixodbc-dev msodbcsql18; \

else \

# x86_64 or others

ACCEPT_EULA=Y apt install -y unixodbc-dev msodbcsql17; \

fi || \

{ echo "Failed to install ODBC driver"; exit 1; }

# Add dependencies of selenium

# The key step should contain instructions similar to the following

COPY chromedriver-linux64-121-0-6167-85 /

COPY chrome-linux64-121-0-6167-85 /

RUN --mount=type=bind,source=/chrome-linux64-121-0-6167-85.zip,target=/chrome-linux64.zip \

unzip /chrome-linux64.zip && \

mv chrome-linux64 /opt/chrome && \

ln -s /opt/chrome/chrome /usr/local/bin/

RUN --mount=type=bind,source=/chromedriver-linux64-121-0-6167-85.zip,target=/chromedriver-linux64.zip \

unzip -j /chromedriver-linux64.zip chromedriver-linux64/chromedriver && \

mv chromedriver /usr/local/bin/ && \

rm -f /usr/bin/google-chrome

# https://forum.aspose.com/t/aspose-slides-for-net-no-usable-version-of-libssl-found-with-linux-server/271344/13

# aspose-slides on linux/arm64 is unavailable

RUN --mount=type=bind,from=infiniflow/ragflow_deps:latest,source=/,target=/deps \

if [ "$(uname -m)" = "x86_64" ]; then \

dpkg -i /deps/libssl1.1_1.1.1f-1ubuntu2_amd64.deb; \

elif [ "$(uname -m)" = "aarch64" ]; then \

dpkg -i /deps/libssl1.1_1.1.1f-1ubuntu2_arm64.deb; \

fi

# builder stage

FROM base AS builder

USER root

WORKDIR /ragflow

# install dependencies from uv.lock file

COPY pyproject.toml uv.lock ./

# https://github.com/astral-sh/uv/issues/10462

# uv records index url into uv.lock but doesn't failover among multiple indexes

RUN --mount=type=cache,id=ragflow_uv,target=/root/.cache/uv,sharing=locked \

if [ "$NEED_MIRROR" == "1" ]; then \

sed -i 's|pypi.org|mirrors.aliyun.com/pypi|g' uv.lock; \

else \

sed -i 's|mirrors.aliyun.com/pypi|pypi.org|g' uv.lock; \

fi; \

if [ "$LIGHTEN" == "1" ]; then \

uv sync --python 3.10 --frozen; \

else \

uv sync --python 3.10 --frozen --all-extras; \

fi

COPY web web

COPY docs docs

RUN --mount=type=cache,id=ragflow_npm,target=/root/.npm,sharing=locked \

cd web && npm install && npm run build

COPY .git /ragflow/.git

RUN version_info=$(git describe --tags --match=v* --first-parent --always); \

if [ "$LIGHTEN" == "1" ]; then \

version_info="$version_info slim"; \

else \

version_info="$version_info full"; \

fi; \

echo "RAGFlow version: $version_info"; \

echo $version_info > /ragflow/VERSION

# production stage

FROM base AS production

USER root

WORKDIR /ragflow

# Copy Python environment and packages

ENV VIRTUAL_ENV=/ragflow/.venv

COPY --from=builder ${VIRTUAL_ENV} ${VIRTUAL_ENV}

ENV PATH="${VIRTUAL_ENV}/bin:${PATH}"

ENV PYTHONPATH=/ragflow/

COPY web web

COPY api api

COPY conf conf

COPY deepdoc deepdoc

COPY rag rag

COPY agent agent

COPY graphrag graphrag

COPY agentic_reasoning agentic_reasoning

COPY pyproject.toml uv.lock ./

COPY docker/service_conf.yaml.template ./conf/service_conf.yaml.template

COPY docker/entrypoint.sh ./

RUN chmod +x ./entrypoint*.sh

# Copy compiled web pages

COPY --from=builder /ragflow/web/dist /ragflow/web/dist

COPY --from=builder /ragflow/VERSION /ragflow/VERSION

ENTRYPOINT ["./entrypoint.sh"]

Starting RAGFlow

Modify docker-compose.yml

The exposed HTTP port was changed to 81, and extra_hosts was set to host.docker.internal:172.17.0.1.

include:
  - ./docker-compose-base.yml

# To ensure that the container processes the locally modified `service_conf.yaml.template` instead of the one included in its image, you need to mount the local `service_conf.yaml.template` to the container.
services:
  ragflow:
    depends_on:
      mysql:
        condition: service_healthy
    image: ${RAGFLOW_IMAGE}
    container_name: ragflow-server
    ports:
      - ${SVR_HTTP_PORT}:9380
      - 81:80
      - 444:443
      - 5678:5678
      - 5679:5679
    volumes:
      - ./ragflow-logs:/ragflow/logs
      - ./nginx/ragflow.conf:/etc/nginx/conf.d/ragflow.conf
      - ./nginx/proxy.conf:/etc/nginx/proxy.conf
      - ./nginx/nginx.conf:/etc/nginx/nginx.conf
      - ../history_data_agent:/ragflow/history_data_agent
      - ./service_conf.yaml.template:/ragflow/conf/service_conf.yaml.template
    env_file: .env
    environment:
      - TZ=${TIMEZONE}
      - HF_ENDPOINT=${HF_ENDPOINT}
      - MACOS=${MACOS}
    networks:
      - ragflow
    restart: on-failure
    # https://docs.docker.com/engine/daemon/prometheus/#create-a-prometheus-configuration
    # If you're using Docker Desktop, the --add-host flag is optional. This flag makes sure that the host's internal IP gets exposed to the Prometheus container.
    extra_hosts:
      #- "host.docker.internal:host-gateway"
      - "host.docker.internal:172.17.0.1"
  # executor:
  #   depends_on:
  #     mysql:
  #       condition: service_healthy
  #   image: ${RAGFLOW_IMAGE}
  #   container_name: ragflow-executor
  #   volumes:
  #     - ./ragflow-logs:/ragflow/logs
  #     - ./nginx/ragflow.conf:/etc/nginx/conf.d/ragflow.conf
  #   env_file: .env
  #   environment:
  #     - TZ=${TIMEZONE}
  #     - HF_ENDPOINT=${HF_ENDPOINT}
  #     - MACOS=${MACOS}
  #   entrypoint: "/ragflow/entrypoint_task_executor.sh 1 3"
  #   networks:
  #     - ragflow
  #   restart: on-failure
  #   # https://docs.docker.com/engine/daemon/prometheus/#create-a-prometheus-configuration
  #   # If you're using Docker Desktop, the --add-host flag is optional. This flag makes sure that the host's internal IP gets exposed to the Prometheus container.
  #   extra_hosts:
  #     - "host.docker.internal:host-gateway"
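The `extra_hosts` entry hardcodes 172.17.0.1, which is typically the address of Docker's default `docker0` bridge but can differ if the daemon was configured with another subnet. The sketch below is one way to read the actual address on the host; `first_ipv4` is a hypothetical helper name, and the `docker0` interface name is an assumption about a default Docker installation.

```shell
# first_ipv4: read `ip -4 -o addr show <iface>` output on stdin and print the
# first IPv4 address without its prefix length.
first_ipv4() {
    awk '{
        for (i = 1; i < NF; i++)
            if ($i == "inet") { split($(i + 1), a, "/"); print a[1]; exit }
    }'
}
```

On the host, `ip -4 -o addr show docker0 | first_ipv4` prints the bridge address to compare against the value in `extra_hosts`.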

Modify docker-compose-base.yml

All images were replaced with arm64 images from China mirrors.

The Redis service was renamed; the same change must be made in .env.

services:
  es01:
    container_name: ragflow-es-01
    profiles:
      - elasticsearch
    image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/library/elasticsearch:8.11.3-linuxarm64
    volumes:
      - esdata01:/usr/share/elasticsearch/data
    ports:
      - ${ES_PORT}:9200
    env_file: .env
    environment:
      - node.name=es01
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=false
      - discovery.type=single-node
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=false
      - xpack.security.transport.ssl.enabled=false
      - cluster.routing.allocation.disk.watermark.low=5gb
      - cluster.routing.allocation.disk.watermark.high=3gb
      - cluster.routing.allocation.disk.watermark.flood_stage=2gb
      - TZ=${TIMEZONE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test: ["CMD-SHELL", "curl http://localhost:9200"]
      interval: 10s
      timeout: 10s
      retries: 120
    networks:
      - ragflow
    restart: on-failure

  infinity:
    container_name: ragflow-infinity
    profiles:
      - infinity
    image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/michaelf34/infinity:latest-linuxarm64
    volumes:
      - infinity_data:/var/infinity
      - ./infinity_conf.toml:/infinity_conf.toml
    command: ["-f", "/infinity_conf.toml"]
    ports:
      - ${INFINITY_THRIFT_PORT}:23817
      - ${INFINITY_HTTP_PORT}:23820
      - ${INFINITY_PSQL_PORT}:5432
    env_file: .env
    environment:
      - TZ=${TIMEZONE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      nofile:
        soft: 500000
        hard: 500000
    networks:
      - ragflow
    healthcheck:
      test: ["CMD", "curl", "http://localhost:23820/admin/node/current"]
      interval: 10s
      timeout: 10s
      retries: 120
    restart: on-failure

  mysql:
    # mysql:5.7 linux/arm64 image is unavailable.
    image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/mysql:8.0.39-linuxarm64
    container_name: ragflow-mysql
    env_file: .env
    environment:
      - MYSQL_ROOT_PASSWORD=${MYSQL_PASSWORD}
      - TZ=${TIMEZONE}
    command:
      --max_connections=1000
      --character-set-server=utf8mb4
      --collation-server=utf8mb4_unicode_ci
      --default-authentication-plugin=mysql_native_password
      --tls_version="TLSv1.2,TLSv1.3"
      --init-file /data/application/init.sql
      --binlog_expire_logs_seconds=604800
    ports:
      - ${MYSQL_PORT}:3306
    volumes:
      - mysql_data:/var/lib/mysql
      - ./init.sql:/data/application/init.sql
    networks:
      - ragflow
    healthcheck:
      test: ["CMD", "mysqladmin" ,"ping", "-uroot", "-p${MYSQL_PASSWORD}"]
      interval: 10s
      timeout: 10s
      retries: 3
    restart: on-failure

  minio:
    image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/quay.io/minio/minio:RELEASE.2023-12-20T01-00-02Z-linuxarm64
    container_name: ragflow-minio
    command: server --console-address ":9001" /data
    ports:
      - ${MINIO_PORT}:9000
      - ${MINIO_CONSOLE_PORT}:9001
    env_file: .env
    environment:
      - MINIO_ROOT_USER=${MINIO_USER}
      - MINIO_ROOT_PASSWORD=${MINIO_PASSWORD}
      - TZ=${TIMEZONE}
    volumes:
      - minio_data:/data
    networks:
      - ragflow
    restart: on-failure

  ragflow-redis:
    # swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/valkey/valkey:8
    image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/valkey/valkey:8-linuxarm64
    container_name: ragflow-redis
    command: redis-server --requirepass ${REDIS_PASSWORD} --maxmemory 128mb --maxmemory-policy allkeys-lru
    env_file: .env
    ports:
      - ${REDIS_PORT}:6379
    volumes:
      - redis_data:/data
    networks:
      - ragflow
    restart: on-failure

volumes:
  esdata01:
    driver: local
  infinity_data:
    driver: local
  mysql_data:
    driver: local
  minio_data:
    driver: local
  redis_data:
    driver: local

networks:
  ragflow:
    driver: bridge
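Because the Redis service was renamed, `REDIS_HOST` in `.env` has to name a service that actually exists in `docker-compose-base.yml`. The following sketch checks that with plain text matching; the helper names (`env_value`, `has_service`, `check_redis_host`) are hypothetical, and the match assumes service keys are indented by exactly two spaces, as in the standard layout of this file.

```shell
# env_value KEY FILE: print the last value assigned to KEY in a KEY=VALUE file.
env_value() {
    sed -n "s/^$1=//p" "$2" | tail -n 1
}

# has_service NAME FILE: succeed when NAME is a key indented by two spaces in
# a Compose file. This is a textual match, not a real YAML parser.
has_service() {
    grep -q "^  $1:[[:space:]]*\$" "$2"
}

# check_redis_host ENV_FILE COMPOSE_FILE: verify that REDIS_HOST names a service.
check_redis_host() {
    host=$(env_value REDIS_HOST "$1")
    if has_service "$host" "$2"; then
        echo "REDIS_HOST=$host matches a service"
    else
        echo "REDIS_HOST=$host has no matching service"
        return 1
    fi
}
```

From RAGFlow's docker directory this would be called as `check_redis_host .env docker-compose-base.yml`.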

Modify service_conf.yaml.template

The default passwords and similar settings were changed.

ragflow:
  host: ${RAGFLOW_HOST:-0.0.0.0}
  http_port: 9380
mysql:
  name: '${MYSQL_DBNAME:-rag_flow}'
  user: '${MYSQL_USER:-root}'
  password: '${MYSQL_PASSWORD:-Yunlian@rag_flow}'
  host: '${MYSQL_HOST:-mysql}'
  port: 3306
  max_connections: 100
  stale_timeout: 30
minio:
  user: '${MINIO_USER:-rag_flow}'
  password: '${MINIO_PASSWORD:-Yunlian@rag_flow}'
  host: '${MINIO_HOST:-minio}:9000'
es:
  hosts: 'http://${ES_HOST:-es01}:9200'
  username: '${ES_USER:-elastic}'
  password: '${ELASTIC_PASSWORD:-Yunlian@rag_flow}'
infinity:
  uri: '${INFINITY_HOST:-infinity}:23817'
  db_name: 'default_db'
redis:
  db: 1
  password: '${REDIS_PASSWORD:-Yunlian@rag_flow}'
  host: '${REDIS_HOST:-redis}:6379'
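The placeholders in this template have the same `${VAR:-default}` form as POSIX shell parameter expansion: the default is used when the variable is unset or empty. Assuming the template is rendered from the container's environment, the Redis host falls back to `redis` whenever `REDIS_HOST` is missing, which no longer matches the renamed `ragflow-redis` service. The sketch below only demonstrates the expansion rule; `render_redis_host` is a hypothetical name.

```shell
# render_redis_host: show how '${REDIS_HOST:-redis}:6379' resolves in a shell.
render_redis_host() {
    printf '%s:6379\n' "${REDIS_HOST:-redis}"
}
```

With `REDIS_HOST=ragflow-redis` set, the function prints `ragflow-redis:6379`; with the variable unset or empty it prints `redis:6379`.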

Modify .env

The default passwords and similar settings were changed.

# The type of doc engine to use.

# Available options:

# - `elasticsearch` (default)

# - `infinity` (https://github.com/infiniflow/infinity)

DOC_ENGINE=${DOC_ENGINE:-elasticsearch}

# ------------------------------

# docker env var for specifying vector db type at startup

# (based on the vector db type, the corresponding docker

# compose profile will be used)

# ------------------------------

COMPOSE_PROFILES=${DOC_ENGINE}

# The version of Elasticsearch. It was changed here, but the value is not actually used.

STACK_VERSION=8.11.3-linuxarm64

# The hostname where the Elasticsearch service is exposed

ES_HOST=es01

# The port used to expose the Elasticsearch service to the host machine,

# allowing EXTERNAL access to the service running inside the Docker container.

ES_PORT=1200

# The password for Elasticsearch.

ELASTIC_PASSWORD=Yunlian@rag_flow

# The port used to expose the Kibana service to the host machine,

# allowing EXTERNAL access to the service running inside the Docker container.

KIBANA_PORT=6601

KIBANA_USER=rag_flow

KIBANA_PASSWORD=Yunlian@rag_flow

# The maximum amount of the memory, in bytes, that a specific Docker container can use while running.

# Update it according to the available memory in the host machine.

MEM_LIMIT=8073741824

# The hostname where the Infinity service is exposed

INFINITY_HOST=infinity

# Port to expose Infinity API to the host

INFINITY_THRIFT_PORT=23817

INFINITY_HTTP_PORT=23820

INFINITY_PSQL_PORT=5432

# The password for MySQL.

MYSQL_PASSWORD=Yunlian@rag_flow

# The hostname where the MySQL service is exposed

MYSQL_HOST=mysql

# The database of the MySQL service to use

MYSQL_DBNAME=rag_flow

# The port used to expose the MySQL service to the host machine,

# allowing EXTERNAL access to the MySQL database running inside the Docker container.

MYSQL_PORT=5455

# The hostname where the MinIO service is exposed

MINIO_HOST=minio

# The port used to expose the MinIO console interface to the host machine,

# allowing EXTERNAL access to the web-based console running inside the Docker container.

MINIO_CONSOLE_PORT=9001

# The port used to expose the MinIO API service to the host machine,

# allowing EXTERNAL access to the MinIO object storage service running inside the Docker container.

MINIO_PORT=9000

# The username for MinIO.

# When updated, you must revise the `minio.user` entry in service_conf.yaml accordingly.

MINIO_USER=rag_flow

# The password for MinIO.

# When updated, you must revise the `minio.password` entry in service_conf.yaml accordingly.

MINIO_PASSWORD=Yunlian@rag_flow

# The hostname where the Redis service is exposed

REDIS_HOST=ragflow-redis

# The port used to expose the Redis service to the host machine,

# allowing EXTERNAL access to the Redis service running inside the Docker container.

REDIS_PORT=26379

# The password for Redis.

REDIS_PASSWORD=Yunlian@rag_flow

# The port used to expose RAGFlow's HTTP API service to the host machine,

# allowing EXTERNAL access to the service running inside the Docker container.

SVR_HTTP_PORT=9380

# The RAGFlow Docker image to download.

# Defaults to the v0.17.2-slim edition, which is the RAGFlow Docker image without embedding models.

RAGFLOW_IMAGE=infiniflow/ragflow:nightly-slim-yl

#

# To download the RAGFlow Docker image with embedding models, uncomment the following line instead:

# RAGFLOW_IMAGE=infiniflow/ragflow:v0.17.2

#

# The Docker image of the v0.17.2 edition includes built-in embedding models:

# - BAAI/bge-large-zh-v1.5

# - maidalun1020/bce-embedding-base_v1

#

# If you cannot download the RAGFlow Docker image:

#

# - For the `nightly-slim` edition, uncomment either of the following:

# RAGFLOW_IMAGE=swr.cn-north-4.myhuaweicloud.com/infiniflow/ragflow:nightly-slim

# RAGFLOW_IMAGE=registry.cn-hangzhou.aliyuncs.com/infiniflow/ragflow:nightly-slim

#

# - For the `nightly` edition, uncomment either of the following:

# RAGFLOW_IMAGE=swr.cn-north-4.myhuaweicloud.com/infiniflow/ragflow:nightly

# RAGFLOW_IMAGE=registry.cn-hangzhou.aliyuncs.com/infiniflow/ragflow:nightly

# The local time zone.

TIMEZONE='Asia/Shanghai'

# Uncomment the following line if you have limited access to huggingface.co:

# HF_ENDPOINT=https://hf-mirror.com

# Optimizations for MacOS

# Uncomment the following line if your operating system is MacOS:

# MACOS=1

# The maximum file size limit (in bytes) for each upload to your knowledge base or File Management.

# To change the 1GB file size limit, uncomment the line below and update as needed.

# MAX_CONTENT_LENGTH=1073741824

# After updating, ensure `client_max_body_size` in nginx/nginx.conf is updated accordingly.

# Note that neither `MAX_CONTENT_LENGTH` nor `client_max_body_size` sets the maximum size for files uploaded to an agent.

# See https://ragflow.io/docs/dev/begin_component for details.

# The log level for the RAGFlow's owned packages and imported packages.

# Available level:

# - `DEBUG`

# - `INFO` (default)

# - `WARNING`

# - `ERROR`

# For example, following line changes the log level of `ragflow.es_conn` to `DEBUG`:

# LOG_LEVELS=ragflow.es_conn=DEBUG

# aliyun OSS configuration

# STORAGE_IMPL=OSS

# ACCESS_KEY=xxx

# SECRET_KEY=eee

# ENDPOINT=http://oss-cn-hangzhou.aliyuncs.com

# REGION=cn-hangzhou

# BUCKET=ragflow65536

# user registration switch

REGISTER_ENABLED=1
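`MEM_LIMIT` is given in bytes, which is easy to misread: the value 8073741824 used above is roughly 7.5 GiB, not 8 GiB. A small sketch for converting in both directions follows; the function names are hypothetical and the arithmetic assumes a shell with 64-bit integers.

```shell
# gib_to_bytes N: print N GiB expressed in bytes.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

# bytes_to_mib N: print N bytes expressed in whole MiB (rounded down).
bytes_to_mib() {
    echo $(( $1 / 1024 / 1024 ))
}
```

For example, `gib_to_bytes 8` gives the value to use for an 8 GiB limit, and `bytes_to_mib 8073741824` shows what the current setting amounts to.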

Start the containers

# Run this in RAGFlow's docker directory
docker compose up -d
# Output like the following appears
WARN[0000] The "HF_ENDPOINT" variable is not set. Defaulting to a blank string.
WARN[0000] The "MACOS" variable is not set. Defaulting to a blank string.
[+] Running 10/14
 ⠦ es01 [⣀⣿⣿⣿⠀⣿⣿⣿⣿⡀] 28.89MB / 452MB Pulling 15.6s
 ⠦ a5319f8e5f3f Downloading [==================> ] 10.03MB/27.2MB 14.6s
 ✔ 51be98b1d6e3 Download complete 11.2s
 ✔ db7cc25a9ed6 Download complete 1.4s
 ✔ 4f4fb700ef54 Download complete 3.4s
 ⠦ 16f72b7d4c4e Downloading [=> ] 11.35MB/417.2MB 14.6s
 ✔ 3530ea432109 Download complete 11.5s
 ✔ f8d0e1d2c4e7 Download complete 12.2s
 ✔ 9aae14f29515 Download complete 13.2s
 ✔ fd08817fddb3 Download complete
# Other common commands
# Check service status
docker compose ps
# Stop
docker compose down
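After `docker compose up -d` returns, the services still need some time before the web interface responds. The sketch below is a generic retry loop that can wrap any readiness check; `retry` is a hypothetical helper name, and the port in the usage example is the 81 mapping configured earlier in this guide.

```shell
# retry ATTEMPTS DELAY CMD...: run CMD until it succeeds, waiting DELAY seconds
# between tries; return non-zero once ATTEMPTS tries have failed.
retry() {
    attempts=$1
    delay=$2
    shift 2
    n=1
    while ! "$@"; do
        if [ "$n" -ge "$attempts" ]; then
            return 1
        fi
        n=$((n + 1))
        sleep "$delay"
    done
}
```

A possible call is `retry 60 5 curl -fsS -o /dev/null http://localhost:81/`, which polls the web port every 5 seconds for up to 60 tries.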

Summary of problems encountered

1. mount command error

# ERROR: failed to solve: failed to solve with frontend dockerfile.v0: failed to create LLB definition: Dockerfile parse error line 14: Unknown flag: mount
# Fix it by editing the Dockerfile as follows.
# 1. Add this as the first line: # syntax=swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/docker/dockerfile:1.4-linuxarm64

2. uv command not found

# Install with curl (recommended)
curl -LsSf https://astral.sh/uv/install.sh | sh
# Or install with pip (make sure pip is up to date)
pip install --upgrade uv

3. Cannot connect to huggingface.co

Switch to a China mirror.

4. "/chrome-linux64-121-0-6167-85" not found: not found

See the notes in the Building RAGFlow section.
